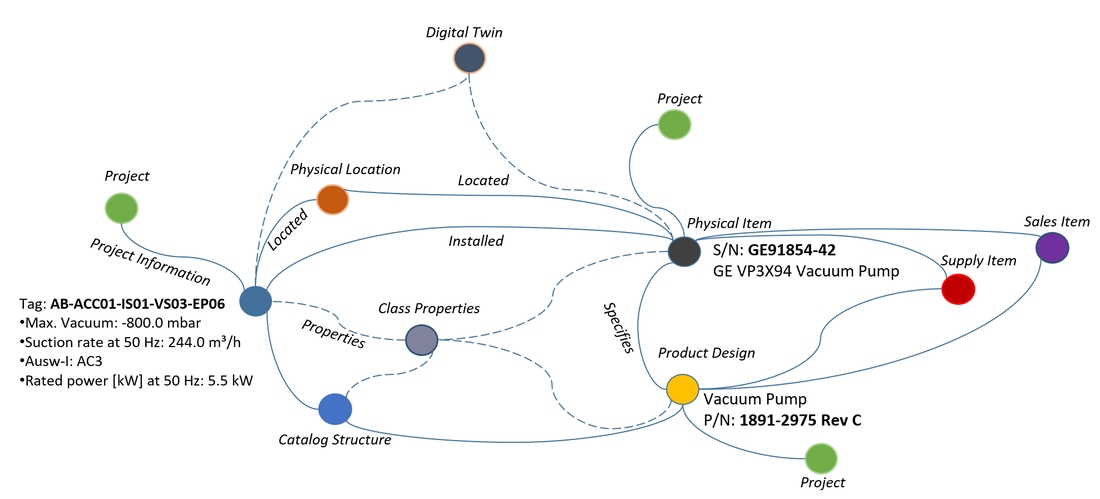

I was challenged the other day by a former colleague to explain the connection between Big Data and PLM. The connection might not be immediately apparent if your viewpoint is that of traditional Product Lifecycle Management systems, which primarily deal with managing the design and engineering data of a product or plant/facility.

However, let us first take a look at a definition of Product Lifecycle Management from Wikipedia:

“In industry, product lifecycle management (PLM) is the process of managing the entire lifecycle of a product from inception, through engineering design and manufacture, to service and disposal of manufactured products. PLM integrates people, data, processes and business systems and provides a product information backbone for companies and their extended enterprise.”

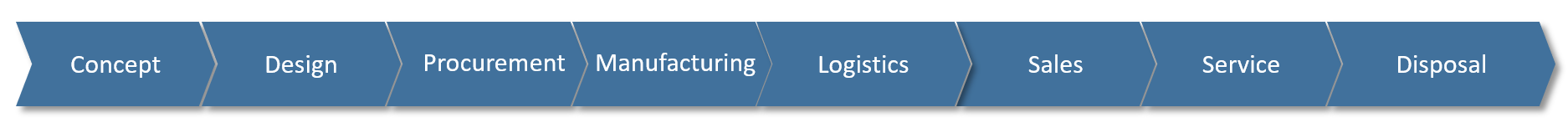

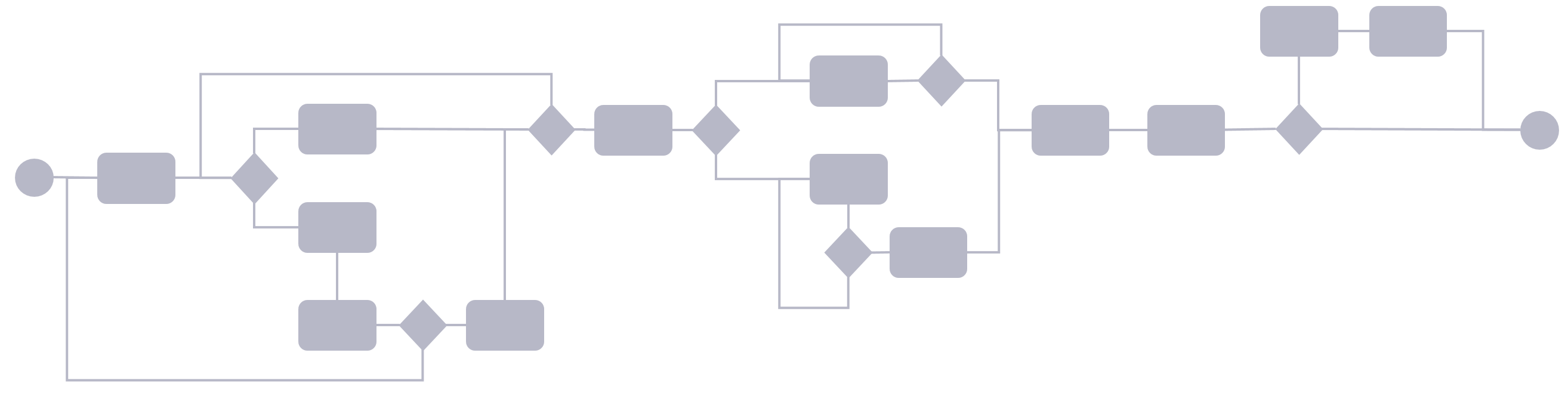

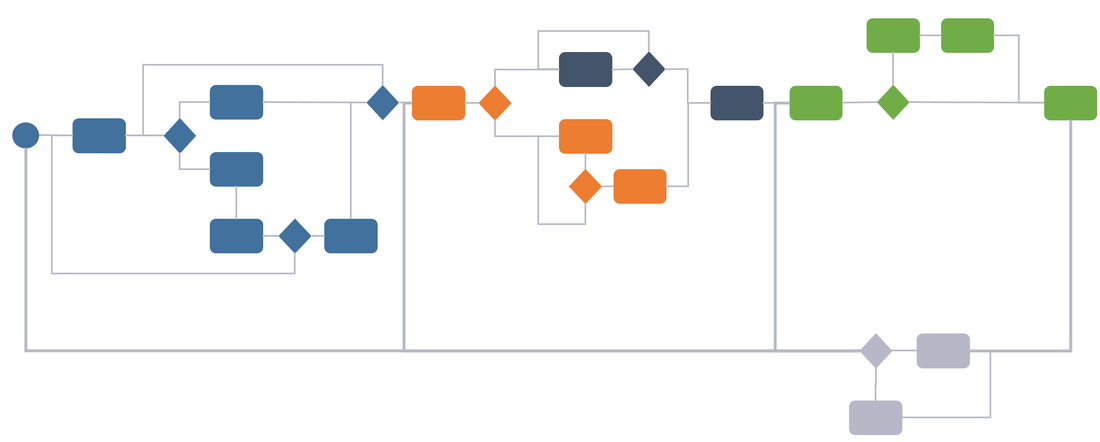

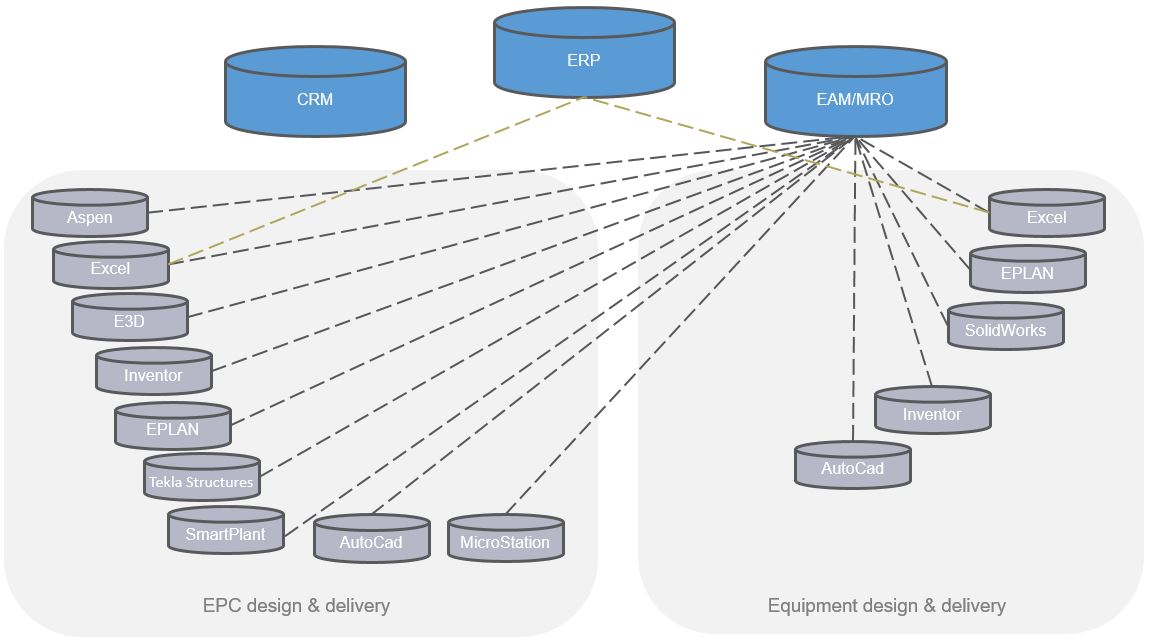

Traditionally it has looked much like this:

Then let’s look at a definition of Big Data:

“Big data is data sets that are so voluminous and complex that traditional data processing application software are inadequate to deal with them. Big data challenges include capturing data, data storage, data analysis, search, sharing, transfer, visualization, querying, updating and information privacy. There are three dimensions to big data known as Volume, Variety and Velocity.

Lately, the term "big data" tends to refer to the use of predictive analytics, user behavior analytics, or certain other advanced data analytics methods that extract value from data, and seldom to a particular size of data set. "There is little doubt that the quantities of data now available are indeed large, but that’s not the most relevant characteristic of this new data ecosystem." Analysis of data sets can find new correlations to "spot business trends, prevent diseases, combat crime and so on.”

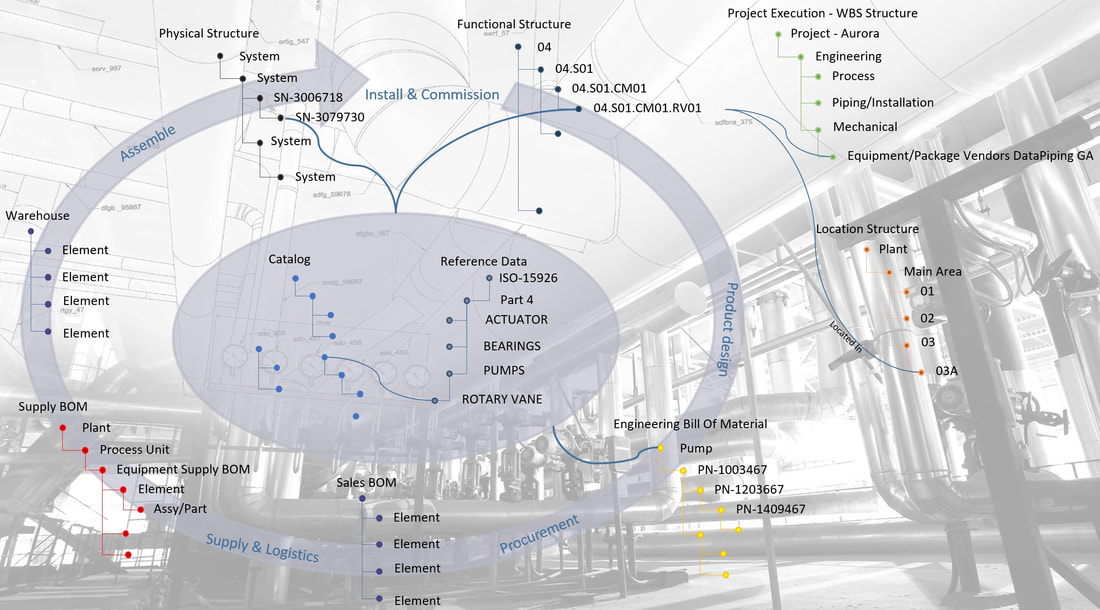

Included in Big Data you’ll find data sets harvested from sensors within all sorts of equipment and products, as well as data fed back from software running within products. One can say that a portion of Big Data is the resulting feedback from the Internet of Things. Data in itself is not of any value whatsoever, but if the data can be analyzed to reveal meaning, trends or knowledge about how a product is used by different customer segments, then it has tremendous value to product manufacturers.

If we take a look at the operational phase of a product, and by that I mean everything that happens between manufacture and disposal, then any manufacturer would like to get their hands on such data, either to improve the product itself or to sell services associated with it. Such services could be anything from utilizing the product as a platform for an ecosystem of connected products to new business models where the product itself is not the key but rather the service it provides. You might, for instance, sell guaranteed uptime or availability, provided that the customer also buys into your service program.

The resulting analysis of the data should, in my view, be managed by, or at least serve as input to, the product definition. The knowledge gleaned from all the analytics of Big Data sets ultimately impacts the product definition itself, since it should lead to revised product designs that fulfill the customer needs better. It might also lead to the revelation that it would be better to split a product into two different designs, going after two distinct end-user behavior categories found as a result of data analysis from the operational phase of the products.
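To make that last point a little more concrete, here is a minimal Python sketch of how operational data might reveal two distinct usage categories. Everything in it is an assumption for illustration only: the `weekly_hours` figures are made up, the function name is hypothetical, and the simple one-dimensional two-means grouping is just a stand-in for whatever analytics a real project would use.

```python
from statistics import mean


def split_into_two_usage_groups(values, iterations=20):
    """Tiny 1-D two-means clustering; returns (low_group, high_group)."""
    # Start with the extremes as the two group centers.
    low_center, high_center = min(values), max(values)
    low, high = [], []
    for _ in range(iterations):
        # Assign each value to the nearest center (ties go to the low group).
        low = [v for v in values if abs(v - low_center) <= abs(v - high_center)]
        high = [v for v in values if abs(v - low_center) > abs(v - high_center)]
        if not low or not high:
            break  # no meaningful split, e.g. all values are identical
        low_center, high_center = mean(low), mean(high)
    return low, high


# Hypothetical field data: average operating hours per week for each unit.
weekly_hours = [3, 4, 5, 4, 6, 38, 41, 40, 44, 5, 39]

occasional, heavy = split_into_two_usage_groups(weekly_hours)
print("Occasional users:", sorted(occasional))
print("Heavy users:", sorted(heavy))
```

If the two groups turn out to be as far apart as in this made-up data set, that is the kind of finding that could feed back into the product definition and prompt a discussion about two separate designs.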

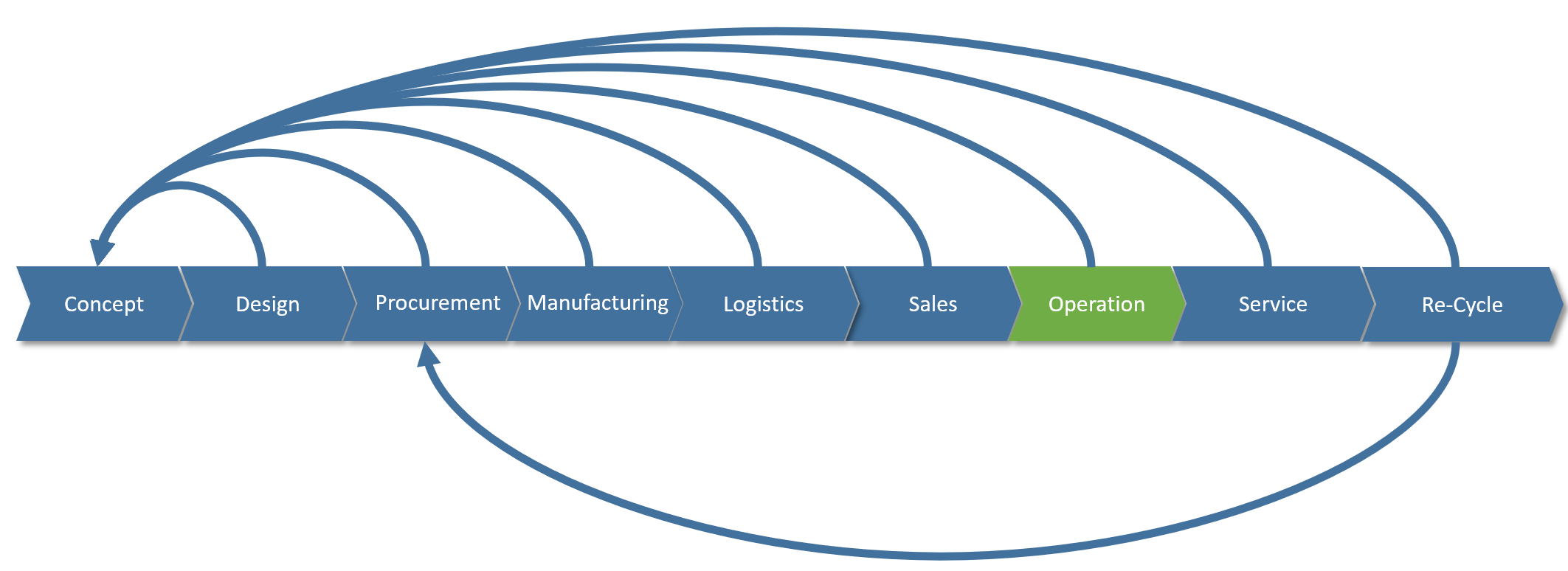

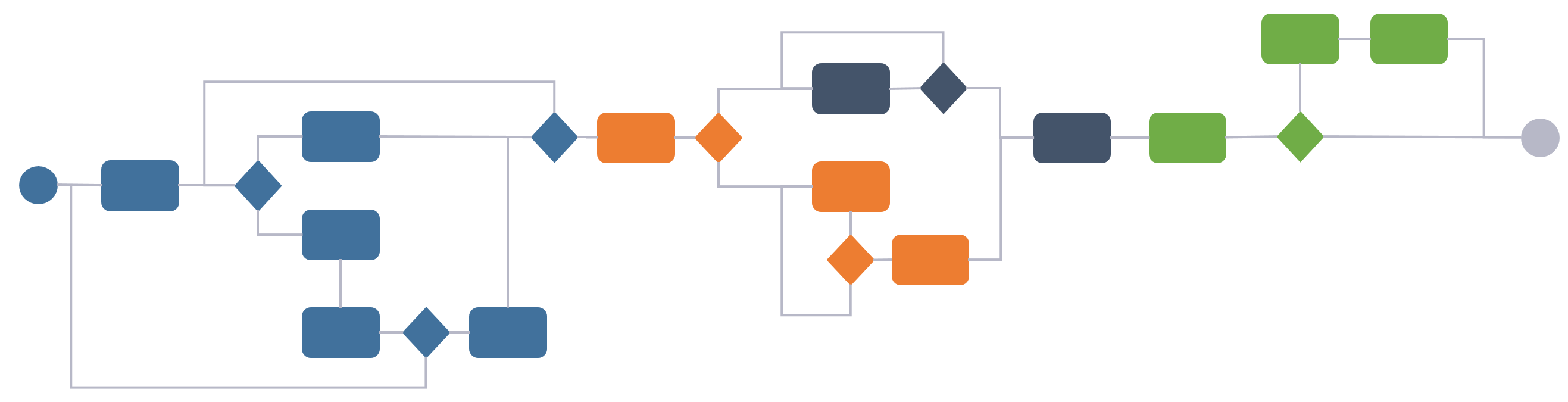

Connected products, Big Data and analysis will to a far greater extent than before allow us to do the following instead:

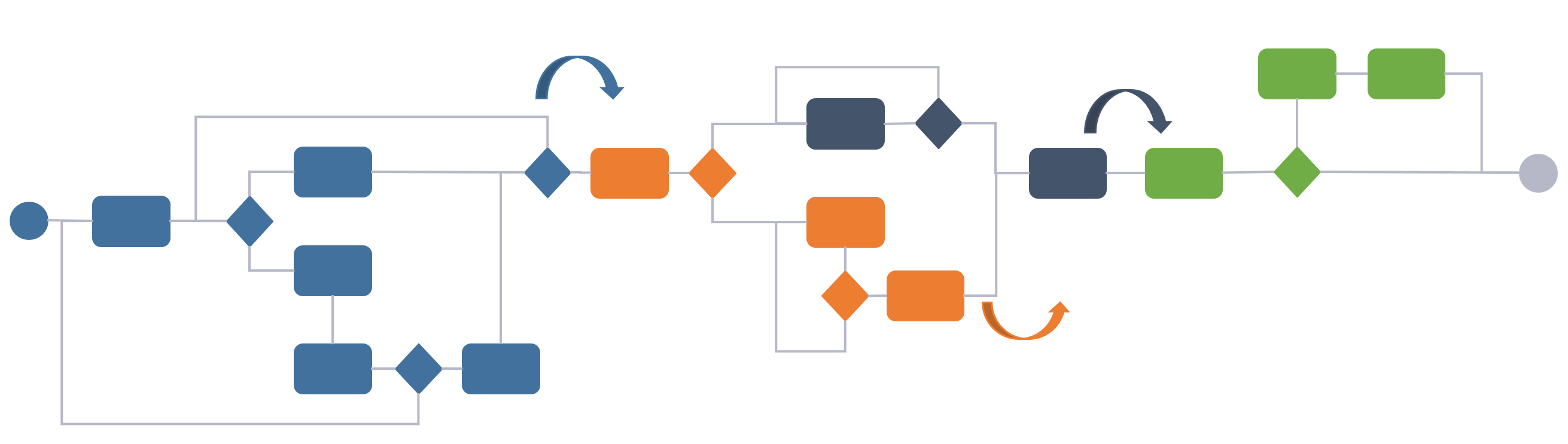

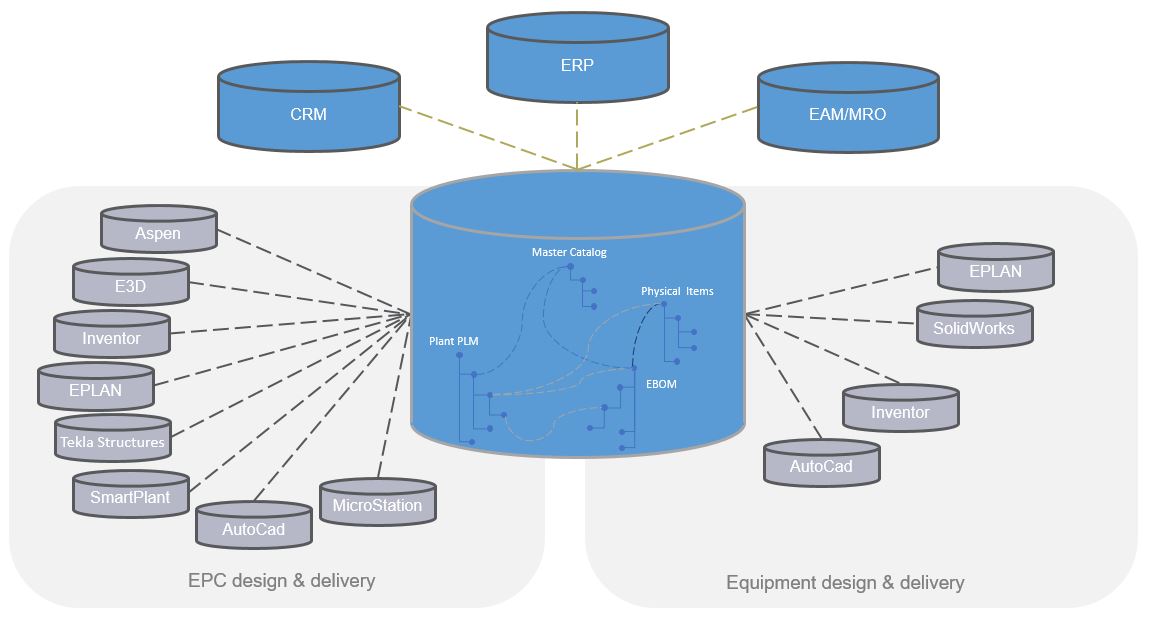

It will mean that experience throughout the full lifecycle can be made available to develop better products, tailor to new end user behavior trends and create new business models.

Note: the image above focuses on the feedback loops to product engineering, but such feedback loops should also be made available from, for instance, service and operation to manufacturing.

Most companies I work with tell me that the feedback loops described in the image above are either too poor or virtually nonexistent. Furthermore, they all say that such feedback loops are becoming vital for their survival, as more and more of their revenue comes from services after a product sale and not from the product sale itself. This means that it is imperative for them to have as much reliable and analyzed data as possible about their products' performance in the field, how their customers are actually using them, and how they are maintained.

For these companies at least, the connection between Big Data analysis and its impact on Product Lifecycle Management is becoming clearer and clearer.

Bjorn Fidjeland

The header image used in this post is by garrykillian and purchased at dreamstime.com
