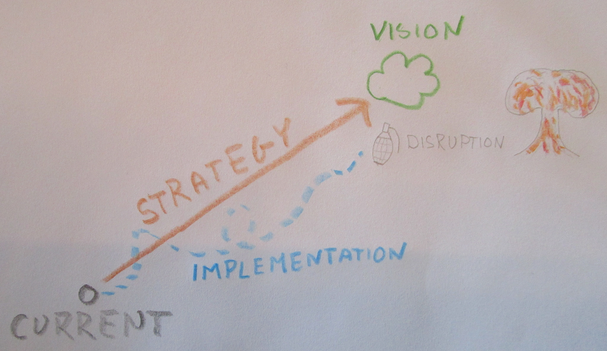

Such a vision should serve as a guiding star. This is true for full corporate visions as well as for smaller areas of the enterprise. It could be for our product lifecycle management and the internet of things, how we see ourselves benefitting from big data, or harnessing virtual design and construction.

The strategy is our plan for how we intend to get to the promised land of our vision. It should be detailed enough to make sense of what kind of steps we need to take to reach our vision, but beware:

Don’t make it too detailed. The first casualty of any battle is the battle plan.

Prepare, but allow for adjustments as maturity and experience grow.

An important part of the strategy work is to acid test the vision itself. Does it make sense? Will it benefit us as a company, and if so, HOW?

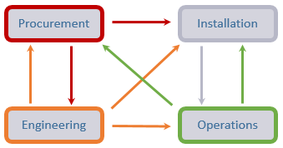

During the strategy work, all stakeholders should be consulted, and yes, that means both internal and external stakeholders. The greatest vision in the world, backed by a good strategy and top-notch internal implementation, won’t help much if you have external dependencies that cannot deliver what you need according to your new way of working...

Business processes will simply come grinding to a halt.

The implementation rarely follows the path the strategy intended. There are usually many reasons for this.

My best advice regarding implementation is to take it one step at a time and evaluate each step. Try to deliver some real business value in each step, and align the result towards vision and strategy. Such an approach will give you the possibility to respond to changing business needs as well as external threats.

But as we come closer to the promised land of our vision, we can sense the feel of it and can almost touch it... Then disruption happens.

Such a disruption might present itself in the form of a merger or acquisition, where vision, strategy and implementation must all be re-evaluated.

The “new” company might have entered another industry where strategy and processes need to be different and old technical solutions won’t fit.

Today everybody seems to be talking about disruptive technology and/or disruptive companies. Most companies acknowledge that there is a real risk of being disrupted, but since most disruptive companies haven’t even been formed yet, it is difficult to identify where the attack will come from and in what form.

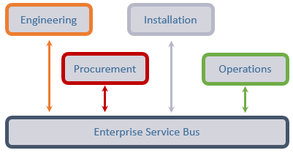

This leads back to vision, strategy and implementation. Vision and strategy can be changed quickly, but unless the implementation is flexible enough to respond to changing needs, the company will be unable to respond quickly enough to meet the disruption.

Bjorn Fidjeland

The header image used in this post is by Skypixel and purchased at dreamstime.com
