What does all of this have to do with the digital twin? Let's have a look.

The term and concept of a digital twin were first introduced by Michael Grieves at the University of Michigan in 2002, but have since taken on a life of their own in different companies.

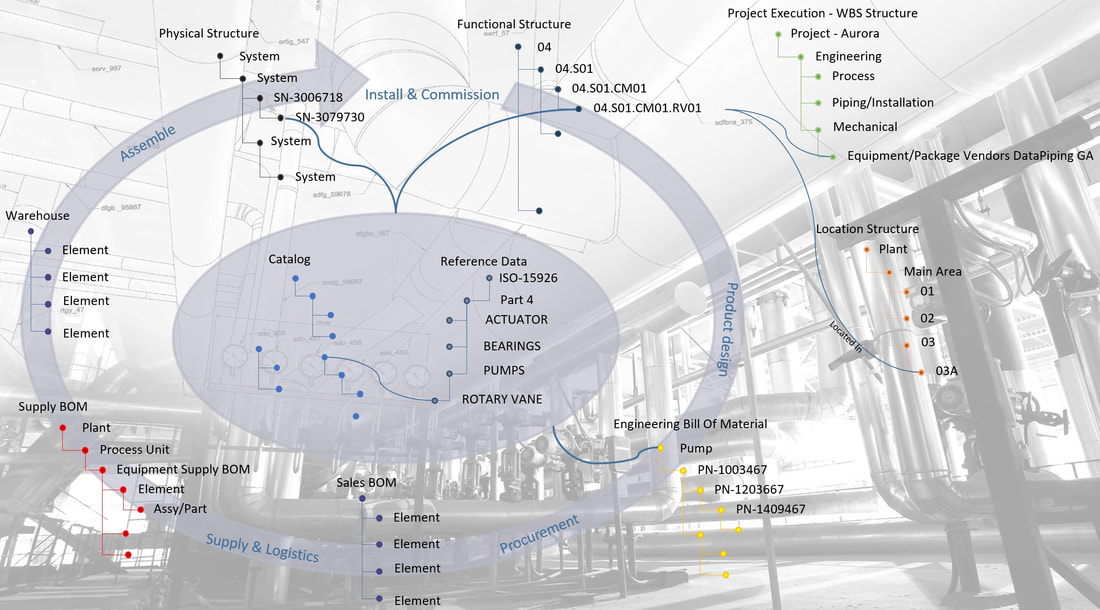

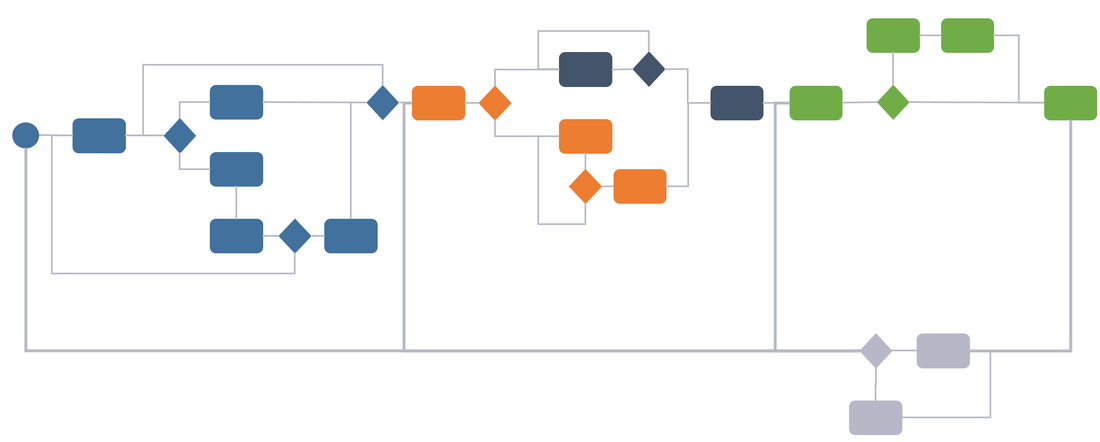

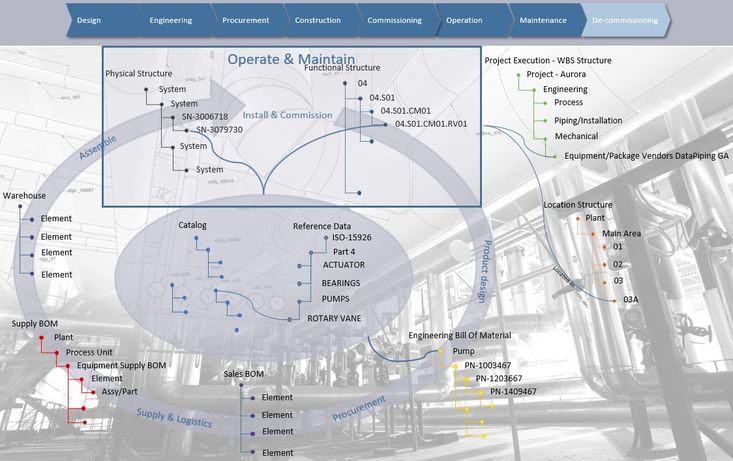

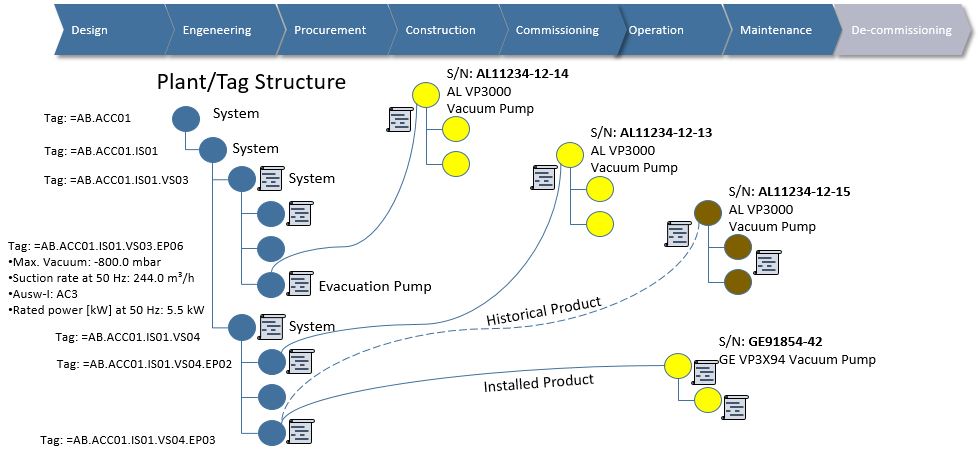

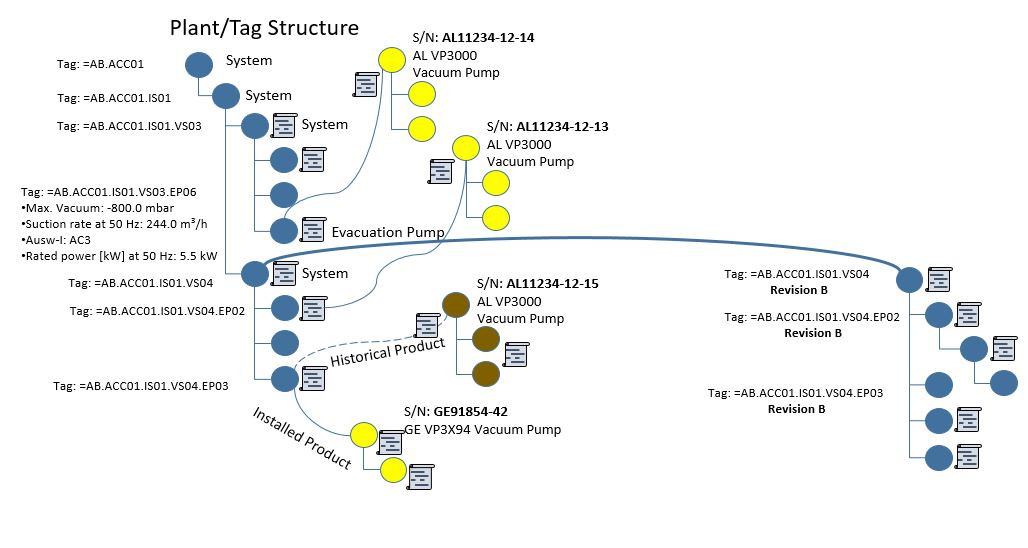

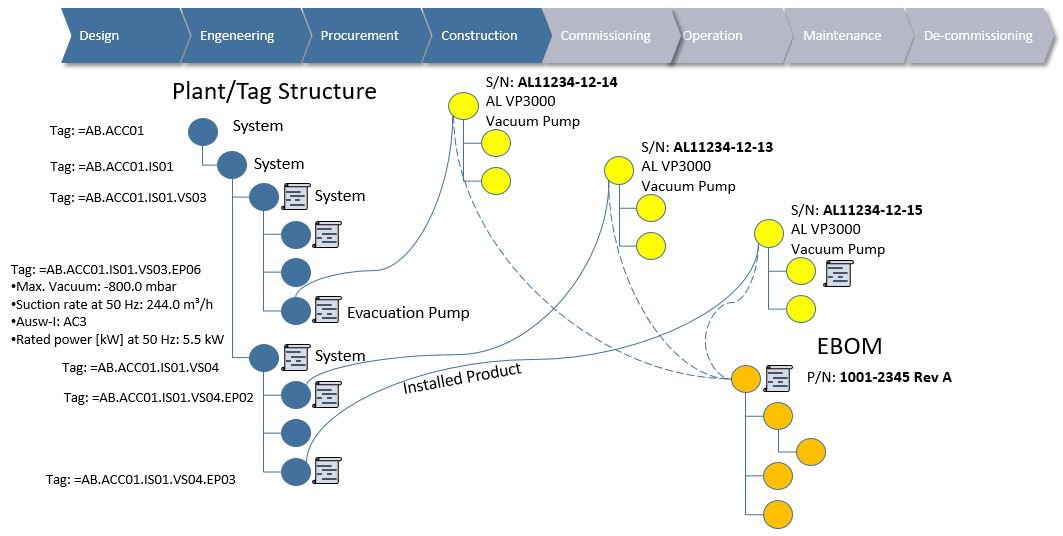

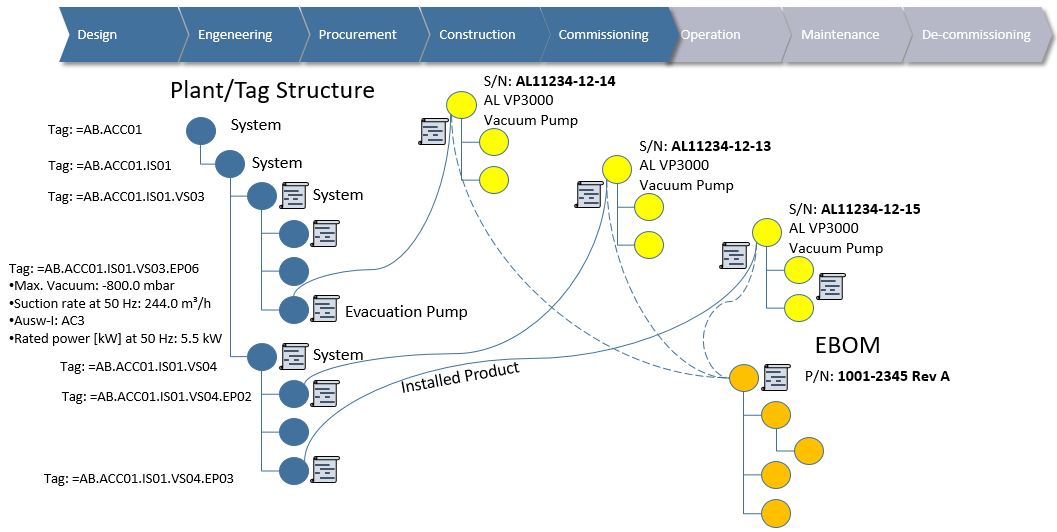

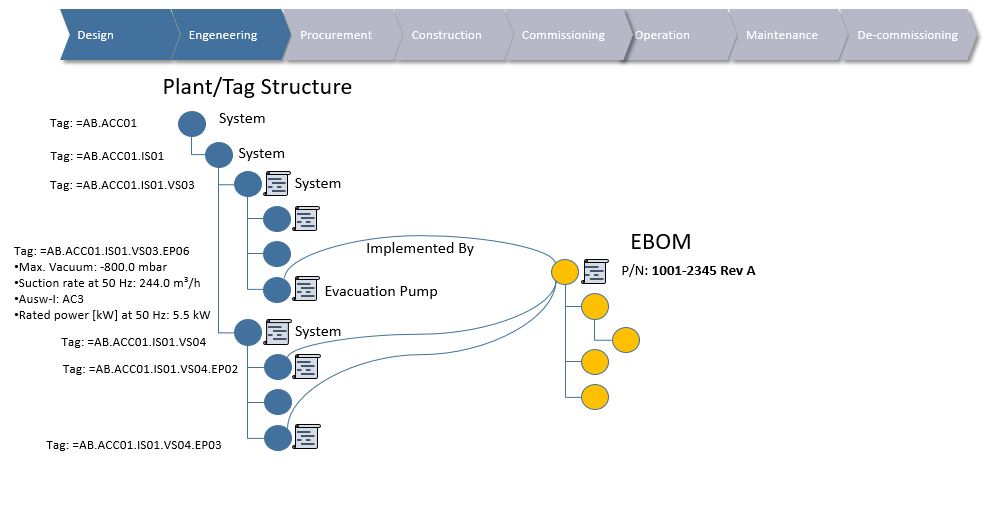

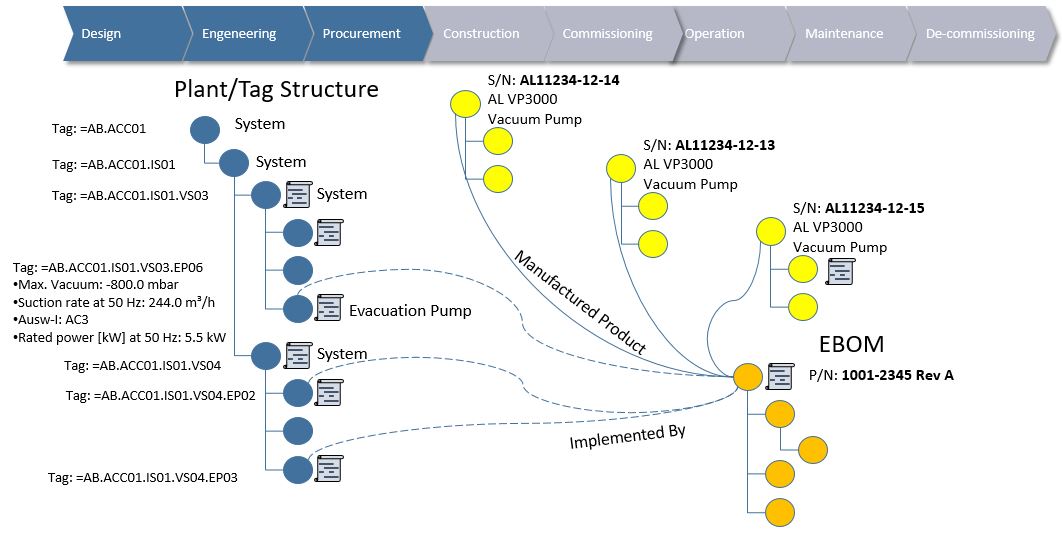

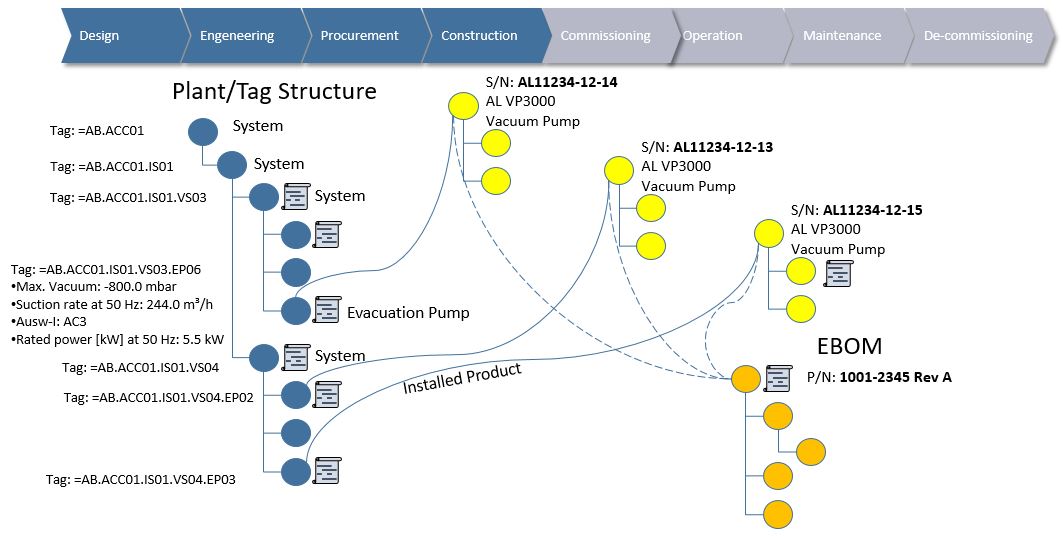

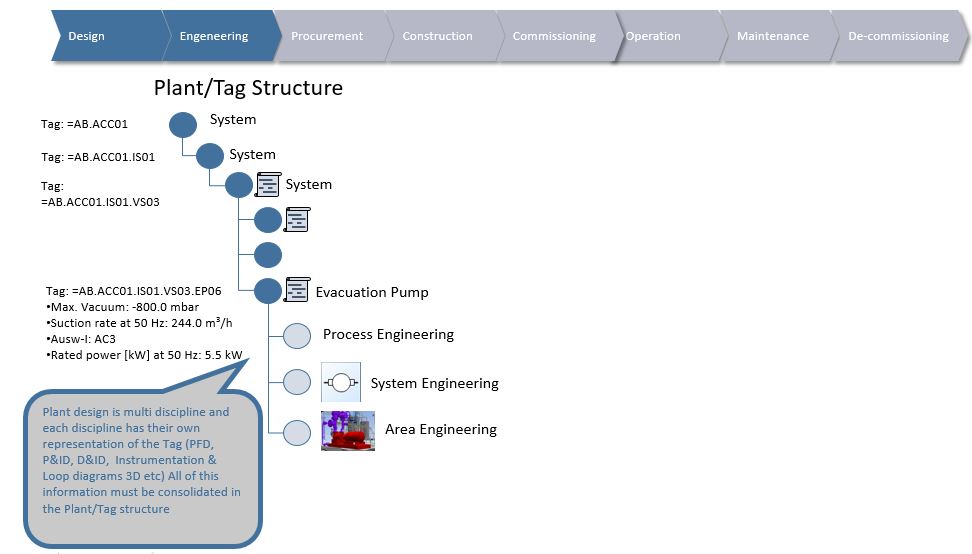

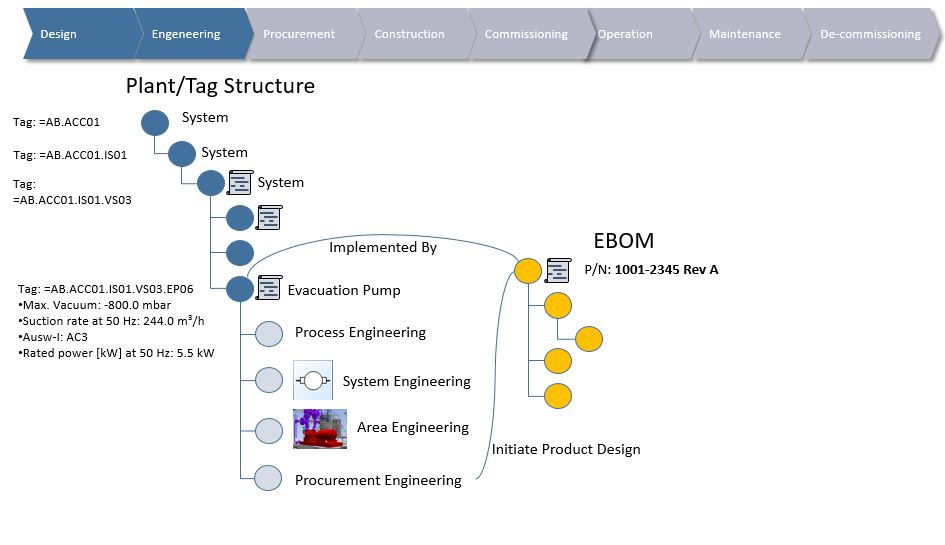

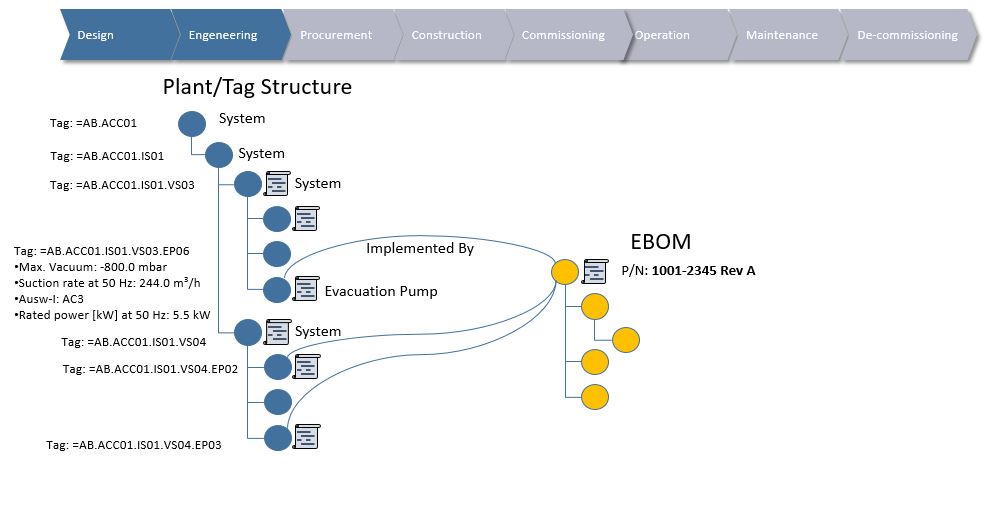

Below is an example of the information that can be accessed from a digital twin, or rather, what the digital twin can serve as an entry point for:

On the other hand, if you have a 3D representation, it can become a front end that end users use to find, search and analyze all the connected information from the data structures described in my previous blog posts. Such insights take us to a whole new level of understanding of each delivered product's life, its challenges and opportunities in different environments, and the way it is actually being used by end customers.

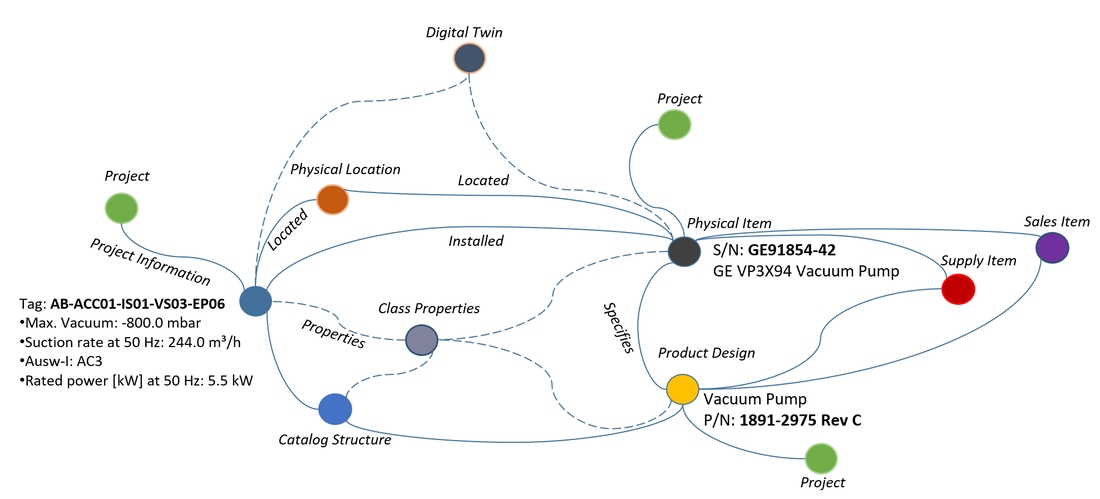

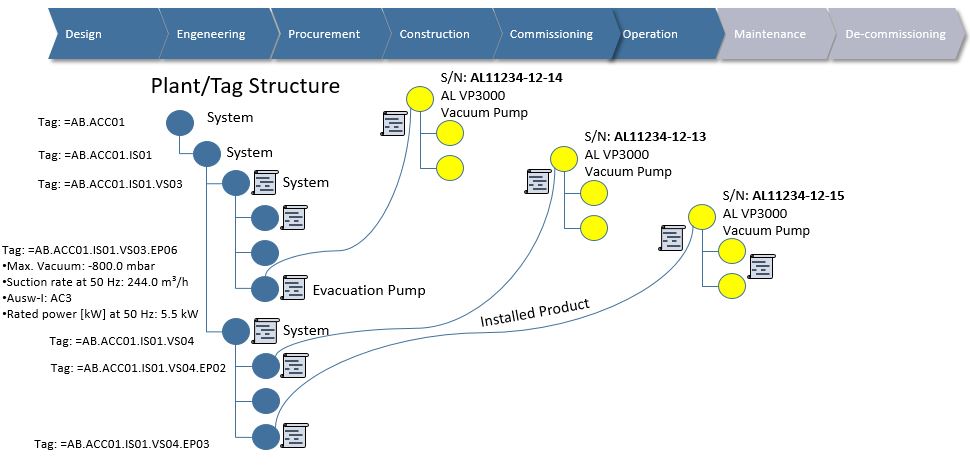

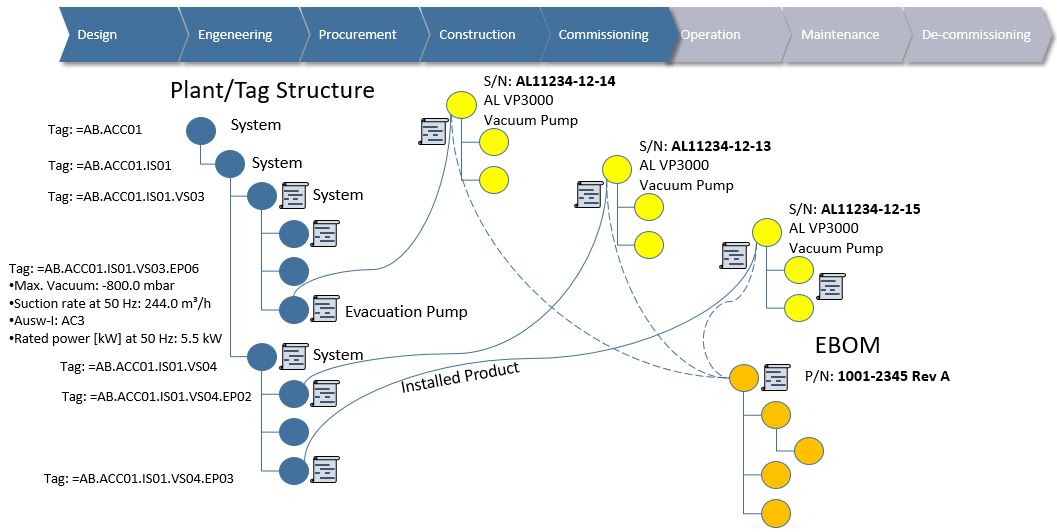

Let’s say that we select a pump via the digital twin in the figure above. The tag of that pump uniquely identifies the functional location in the facility. From that tag, an end user can pull the parametric Piping & Instrumentation Diagram (P&ID) of the system the pump belongs to, the functional specification for the pump in the designed system, and information about the pump that was actually installed: serial number, manufacturing information, supplier, certificates, the installation and commissioning procedures that were performed, and the actual operational data of the pump itself.
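To make the pump example a bit more concrete, here is a minimal Python sketch of how a tag could act as the entry point to the connected information. The class names, fields, tag `P-101` and all values are my own assumptions for illustration, not a description of any particular platform.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class InstalledAsset:
    """The physical unit actually installed at a tag (hypothetical model)."""
    serial_number: str
    supplier: str
    certificates: list = field(default_factory=list)
    operational_data: dict = field(default_factory=dict)


@dataclass
class FunctionalLocation:
    """A tagged position in the facility, independent of the installed unit."""
    tag: str
    system: str            # the system whose P&ID shows this tag
    functional_spec: dict  # design requirements for this position
    installed: Optional[InstalledAsset] = None


def lookup(twin: dict, tag: str) -> dict:
    """Gather everything reachable from a tag selected in the 3D view."""
    location = twin[tag]
    asset = location.installed
    return {
        "system": location.system,
        "functional_spec": location.functional_spec,
        "serial_number": asset.serial_number if asset else None,
        "supplier": asset.supplier if asset else None,
        "operational_data": asset.operational_data if asset else {},
    }


# Illustrative data only; the tag, system and values are made up.
twin = {
    "P-101": FunctionalLocation(
        tag="P-101",
        system="Cooling water",
        functional_spec={"flow_m3_per_h": 120.0},
        installed=InstalledAsset(
            serial_number="SN-0042",
            supplier="Example Pumps AS",
            operational_data={"power_kw": 37.5},
        ),
    )
}
info = lookup(twin, "P-101")
```

The point of the split between `FunctionalLocation` and `InstalledAsset` is that the tag stays the same even if the physical pump is replaced during the facility's life.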

The real power in the operational phase becomes evident when operational data is associated with each delivered pump. The operational data can then be compared with the environmental conditions the physical equipment operates in. Let’s say that the fluid being pumped contains more and more sediment, and our historical records of similar conditions tell us that the pump will likely fail within the next ten days due to wear and tear of critical components. However, the same records indicate that if we reduce the power by 5 percent, we will be able to operate the full system until the next scheduled maintenance window in 15 days. Information like that gives real business value in terms of increased uptime.
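The decision in that scenario can be sketched in a few lines of Python. I am assuming here that some prediction model has already turned historical records into "days until failure" per power setting; the function name and the extra 90 percent entry are invented for illustration.

```python
from typing import Optional


def choose_power_setting(predicted_days: dict,
                         days_to_maintenance: float) -> Optional[float]:
    """Return the highest power fraction predicted to last until maintenance.

    predicted_days maps a power fraction (1.0 = full power) to the predicted
    number of days before failure at that setting.
    """
    viable = [power for power, days in predicted_days.items()
              if days >= days_to_maintenance]
    return max(viable) if viable else None


# 1.00 and 0.95 mirror the scenario in the text; 0.90 is an invented extra.
predictions = {1.00: 10.0, 0.95: 15.0, 0.90: 21.0}
setting = choose_power_setting(predictions, days_to_maintenance=15.0)
print(setting)  # 0.95
```

If no setting is predicted to reach the maintenance window, the function returns `None`, which would be the signal to plan an earlier intervention instead.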

Let’s look at some other possibilities.

If we now consider a full facility with a three-dimensional representation:

During the EPC (Engineering, Procurement and Construction) phase it is possible to associate the 3D model with a fourth dimension, time, turning it into a 4D model. By doing so, the model can be used to analyze and validate different installation execution plans, or to monitor the actual ongoing installation of the facility. We can actually see the individual parts of the model appearing as time progresses.

A fifth dimension can also be added, namely cost. Here the cost development over time according to one or several proposed installation execution plans or the actual installation itself can be analyzed or monitored.
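As a rough sketch of the idea, each part of the model can carry an installation date (the fourth dimension) and a cost (the fifth). The `PlannedItem` class, the plan and the figures below are invented for illustration; a real 4D/5D tool would link these to the actual 3D geometry and schedule.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class PlannedItem:
    name: str           # a part of the 3D model
    install_date: date  # the fourth dimension: time
    cost: float         # the fifth dimension: cost


def visible_at(plan, when):
    """Names of the model parts that should be installed by a given date."""
    return [item.name for item in plan if item.install_date <= when]


def cost_at(plan, when):
    """Accumulated cost of everything installed by a given date."""
    return sum(item.cost for item in plan if item.install_date <= when)


# Invented installation execution plan, for illustration only.
plan = [
    PlannedItem("foundation", date(2024, 1, 15), 200_000.0),
    PlannedItem("pump skid", date(2024, 2, 10), 80_000.0),
    PlannedItem("piping", date(2024, 3, 5), 50_000.0),
]
checkpoint = date(2024, 2, 28)
print(visible_at(plan, checkpoint))  # ['foundation', 'pump skid']
print(cost_at(plan, checkpoint))     # 280000.0
```

Running the same two functions over several alternative plans, or over the actual installation dates, gives the comparison between proposed and real progress described above.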

This is already being done by some early movers in the construction industry where it is referred to as 5D or Virtual Design & Construction.

The model can also serve as an important asset when planning and coordinating space claims made by different disciplines during the design as well as during the actual installation. It can easily give visual feedback if there is a conflict between space claims made by electrical engineering and mechanical engineering, or if there is a conflict in the installation execution plan in terms of planned access by different working crews.
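A very simplified way to picture a space-claim check is to treat each claim as an axis-aligned box and test every pair for overlap. This is only a sketch under that assumption; real clash detection works on the actual geometry, and the disciplines and coordinates below are made up.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class SpaceClaim:
    discipline: str
    min_corner: tuple  # (x, y, z) of the lower corner, in metres
    max_corner: tuple  # (x, y, z) of the upper corner, in metres


def overlaps(a: SpaceClaim, b: SpaceClaim) -> bool:
    """Two boxes overlap only if their extents overlap on all three axes."""
    return all(
        a.min_corner[i] < b.max_corner[i] and b.min_corner[i] < a.max_corner[i]
        for i in range(3)
    )


def find_conflicts(claims):
    """Return the pairs of disciplines whose claimed spaces intersect."""
    return [(a.discipline, b.discipline)
            for a, b in combinations(claims, 2) if overlaps(a, b)]


# Invented claims, for illustration only.
claims = [
    SpaceClaim("electrical", (0.0, 0.0, 0.0), (2.0, 2.0, 2.0)),
    SpaceClaim("mechanical", (1.0, 1.0, 1.0), (3.0, 3.0, 3.0)),
    SpaceClaim("piping", (5.0, 5.0, 5.0), (6.0, 6.0, 6.0)),
]
conflicts = find_conflicts(claims)
print(conflicts)  # [('electrical', 'mechanical')]
```

The same check could be applied to planned access by working crews if each claim also carried a time window, which I have left out to keep the sketch short.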

More and more companies are also making use of laser scanning to get an accurate 3D model of what has actually been installed so far. This model can easily be compared with the design model to see if there are any deviations. If deviations are found, they can be acted upon by analyzing their impact: Can the deviation be left as it is, or will it require re-design? Does leaving it as it is change the performance of the overall system? Are we still able to perform the rest of the installation with less available space?

The answers to these questions might mean that we have to dismantle the parts of the system that have deviations. It is, however, a lot better and more cost-effective to identify such problems as early as possible.
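The comparison between design and scan can be sketched as follows. I am assuming that the scan has already been processed into one position per tag, which is a big simplification of real point-cloud data; the tags, coordinates and tolerance are invented.

```python
import math


def find_deviations(design: dict, scanned: dict, tolerance_m: float) -> dict:
    """Return {tag: distance} where the scanned position is out of tolerance."""
    deviations = {}
    for tag, designed_position in design.items():
        if tag not in scanned:
            continue  # not installed (or not scanned) yet
        distance = math.dist(designed_position, scanned[tag])
        if distance > tolerance_m:
            deviations[tag] = distance
    return deviations


# Positions in metres; all values are made up for illustration.
design = {
    "P-101": (10.0, 4.0, 0.0),
    "V-201": (12.0, 4.0, 1.5),
    "E-301": (20.0, 8.0, 0.0),
}
scanned = {
    "P-101": (10.0, 4.0, 0.0),
    "V-201": (12.3, 4.4, 1.5),
}
deviations = find_deviations(design, scanned, tolerance_m=0.05)
```

Here only `V-201` would be reported, roughly half a metre away from its designed position, while `E-301` is skipped because it has not been installed yet.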

This is just great, right? Such insights would have a huge impact on how EPCs manage their projects, how operators run their plants, and how product vendors operate or service their equipment in the field, as well as on how information is fed back to engineering to make better products.

(Power-by-the-Hour is a trademark of Rolls-Royce. Although the concept itself is 50 years old, you can read about a more recent development here.)

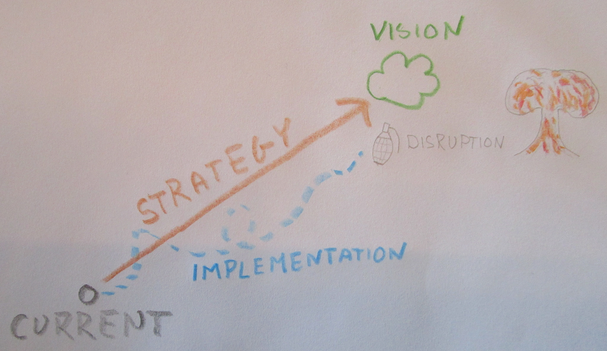

So why haven’t more companies already done it?

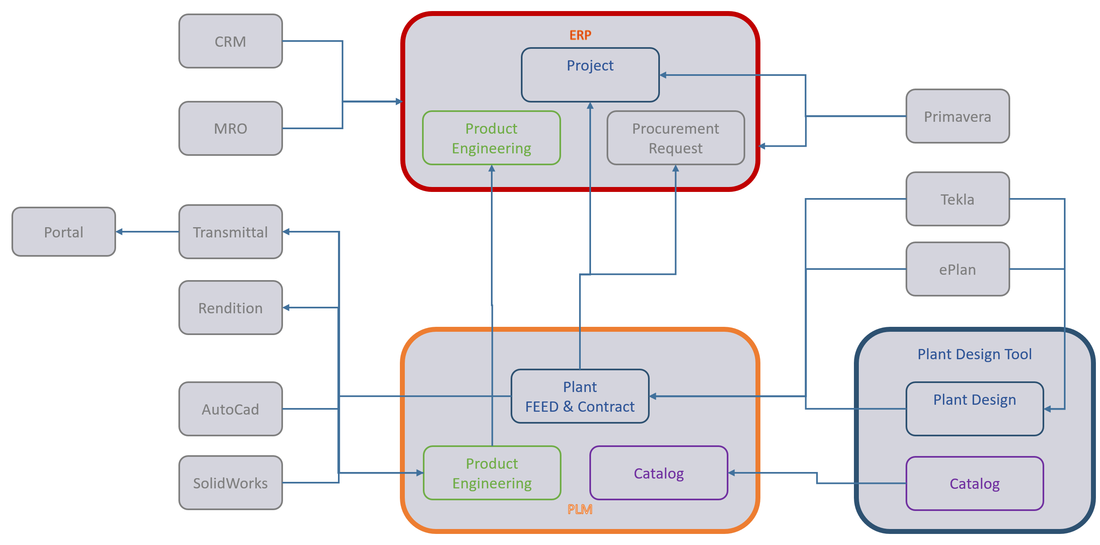

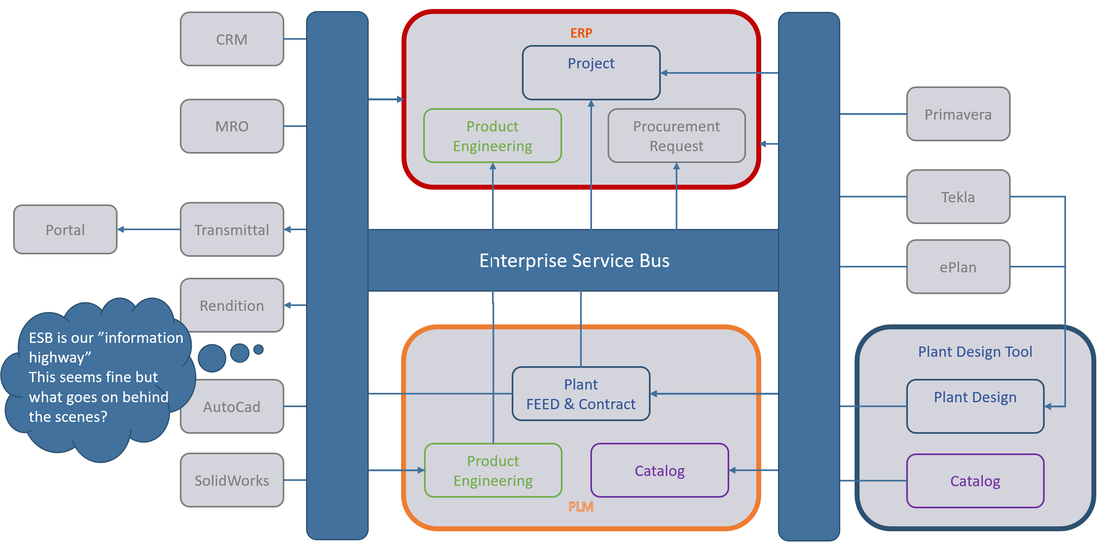

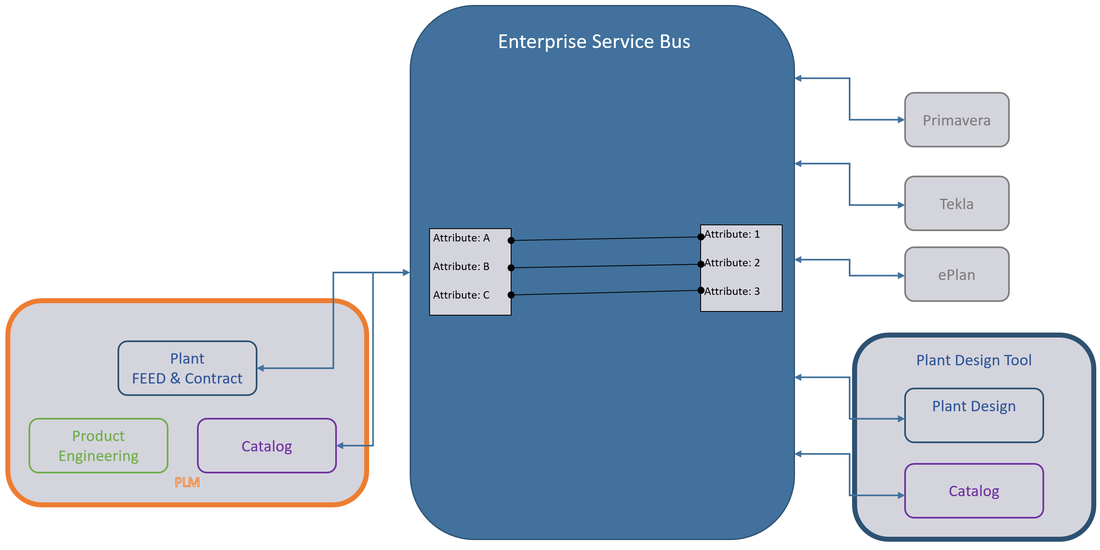

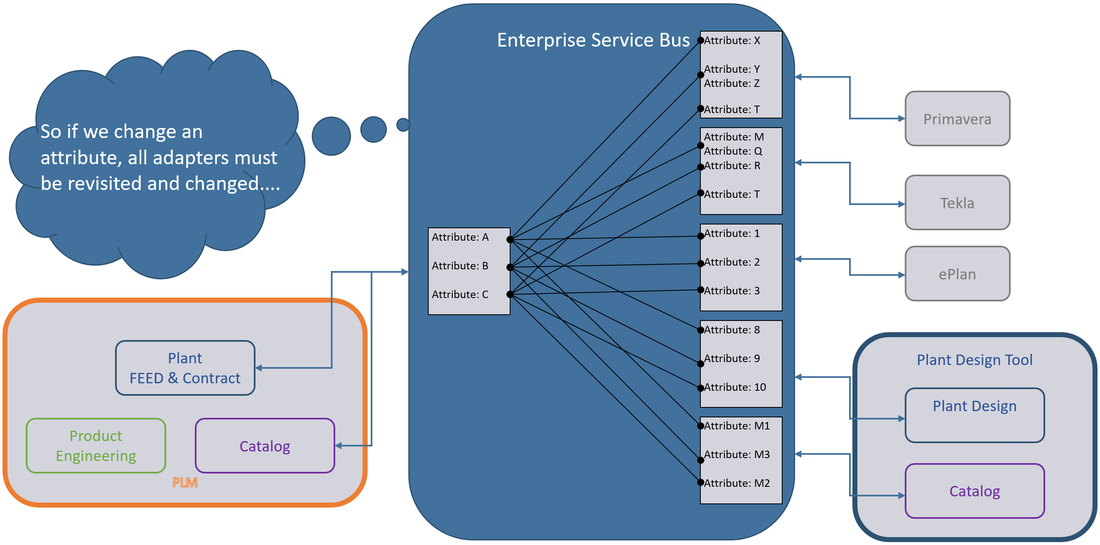

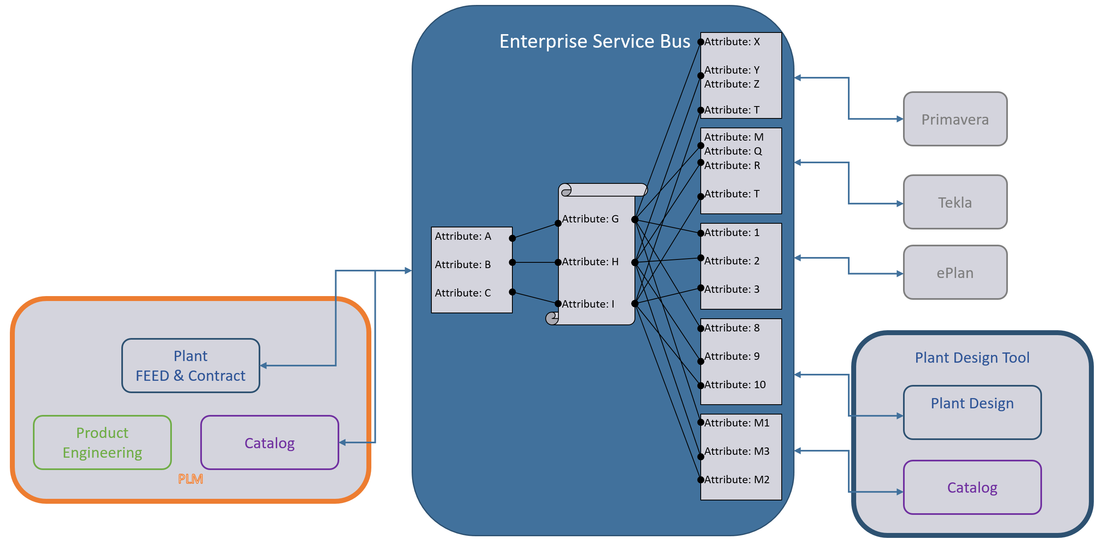

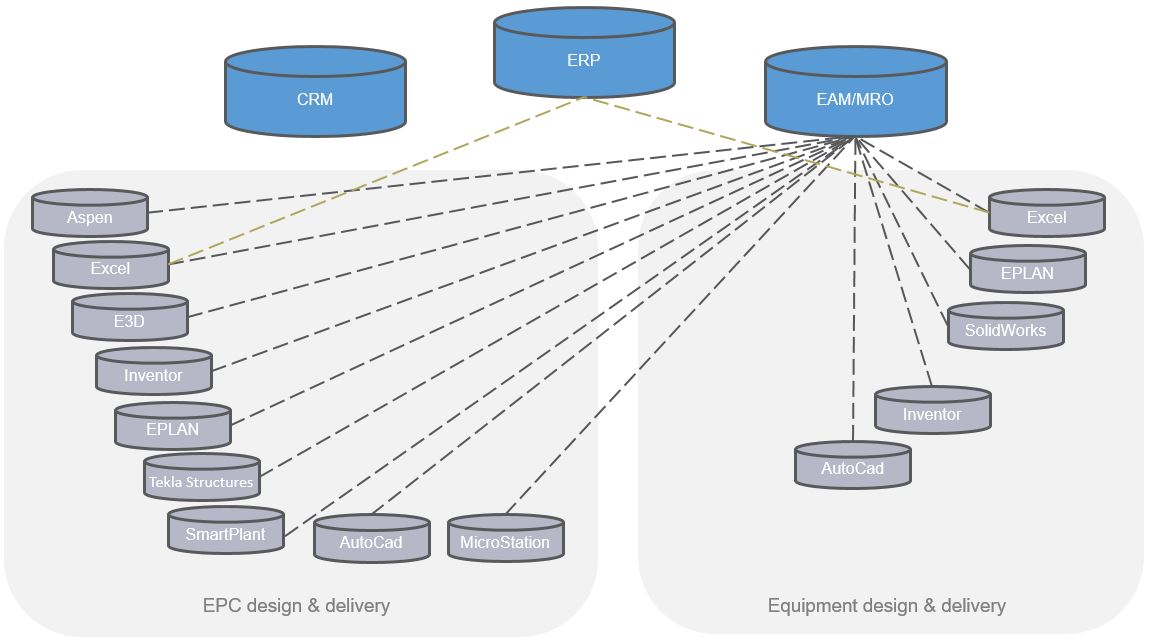

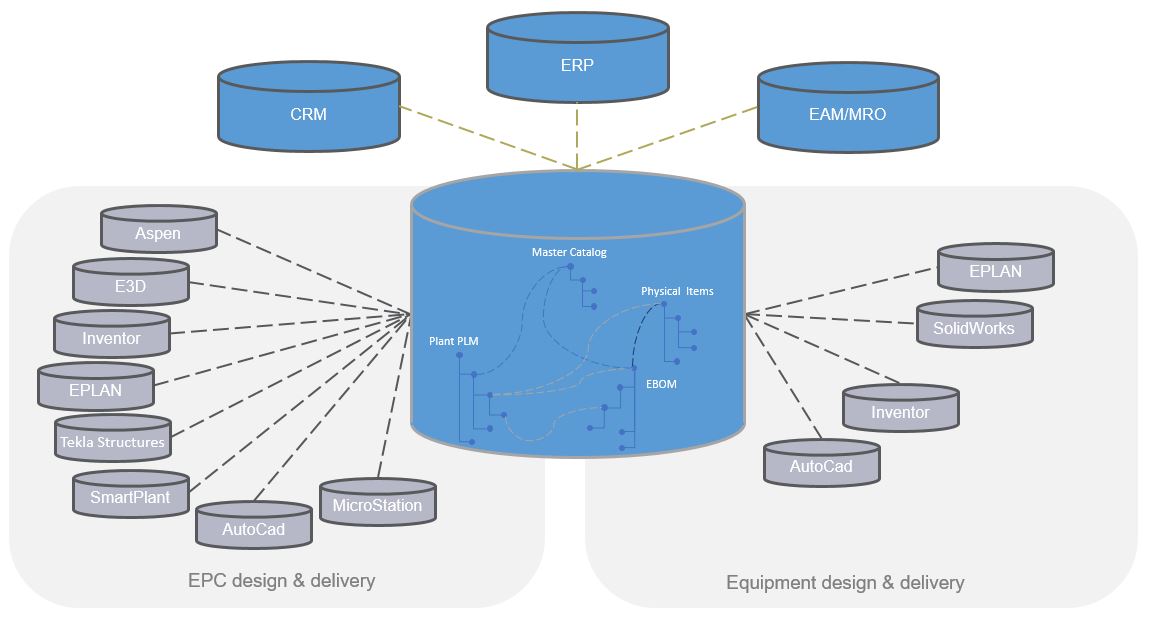

Because in order to get there, the underlying data must be connected, and it must be in the form of… yes, data, as in objects, attributes and relationships. To be at its most effective, this requires a massive shift from document orientation to connected data orientation.
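To illustrate what "objects, attributes and relationships" buys you compared to documents, here is a tiny Python sketch where every fact is stored as a (subject, relationship, object) triple. The tags, serial numbers, suppliers and relationship names are all invented.

```python
# Each fact is a (subject, relationship, object) triple; all names are invented.
facts = [
    ("P-101", "is_a", "pump"),
    ("P-101", "part_of", "Cooling water"),
    ("P-101", "installed_unit", "SN-0042"),
    ("SN-0042", "supplied_by", "Example Pumps AS"),
    ("P-102", "is_a", "pump"),
    ("P-102", "installed_unit", "SN-0043"),
    ("SN-0043", "supplied_by", "Other Vendor Ltd"),
]


def objects_of(subject, relationship):
    """Follow a relationship forwards from a subject."""
    return [o for s, r, o in facts if s == subject and r == relationship]


def subjects_of(relationship, obj):
    """Follow a relationship backwards from an object."""
    return [s for s, r, o in facts if r == relationship and o == obj]


def tags_with_units_from(supplier):
    """Walk backwards: supplier -> serial numbers -> functional tags."""
    tags = []
    for serial in subjects_of("supplied_by", supplier):
        tags.extend(subjects_of("installed_unit", serial))
    return tags


print(objects_of("P-101", "installed_unit"))     # ['SN-0042']
print(tags_with_units_from("Example Pumps AS"))  # ['P-101']
```

A question like "where in the facility do we have equipment from this supplier?" is a two-step traversal here, whereas in a document-oriented world it usually means opening and reading a stack of files.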

On the bright side, several companies in very diverse industries have started this journey, and some are already starting to harvest the fruits of their adventure.

My advice to any company thinking about doing the same would be along the lines of:

When eating this particular elephant, do it one bite at a time, remember to swallow, and let your organization digest between each bite.

Bjorn Fidjeland

The header image used in this post is by Elnur and purchased at dreamstime.com
