The articles cover each company's motivation for the approach it chose, and where it put its main focus in order to achieve its goals.

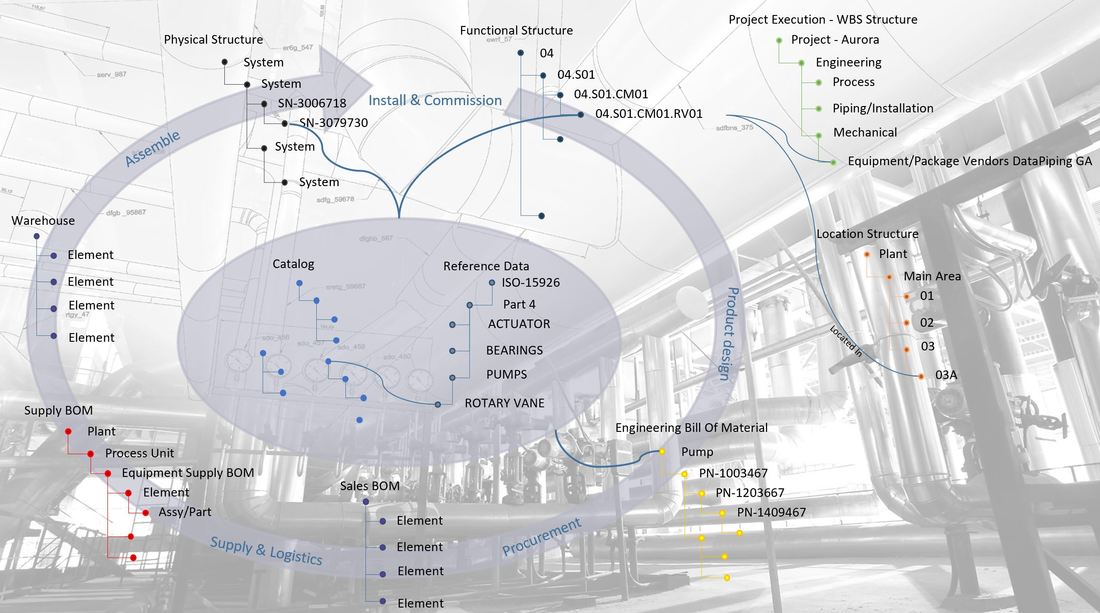

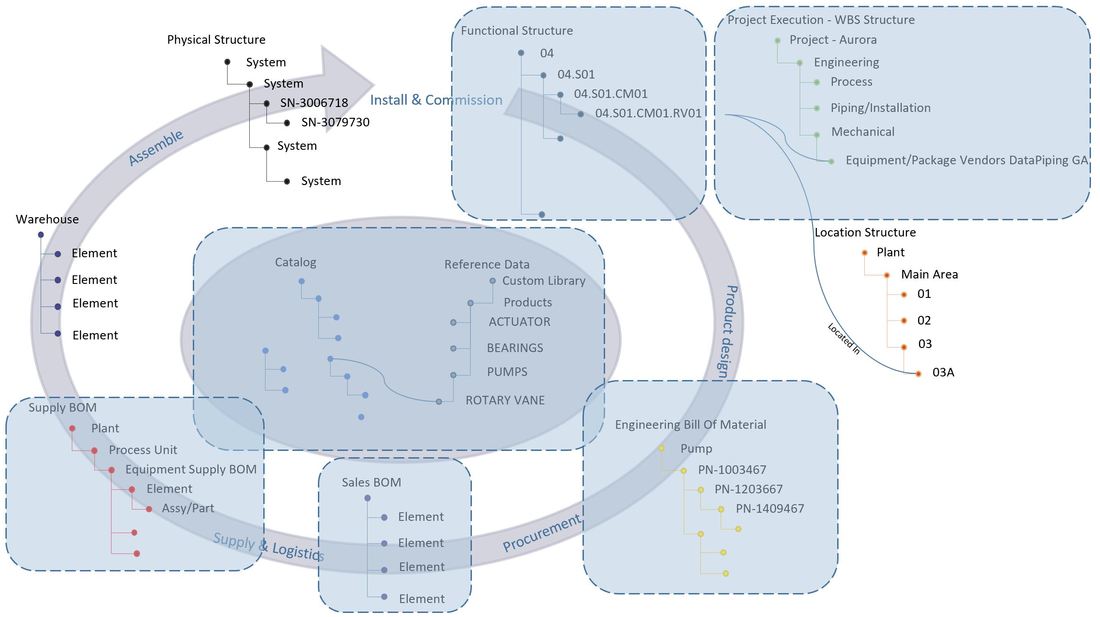

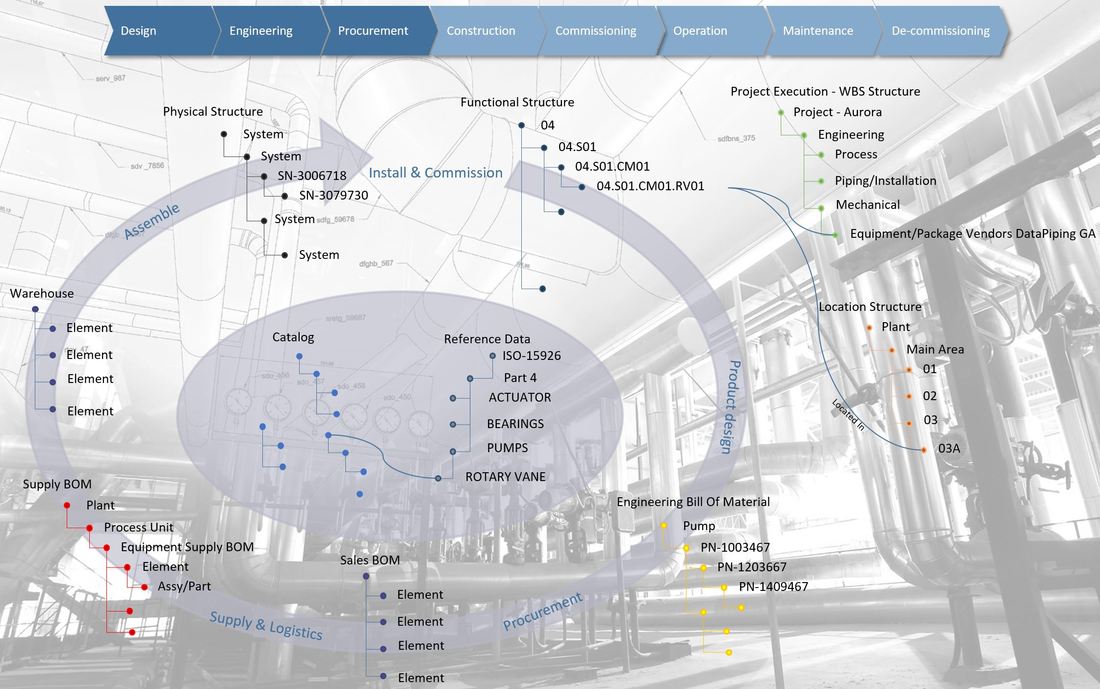

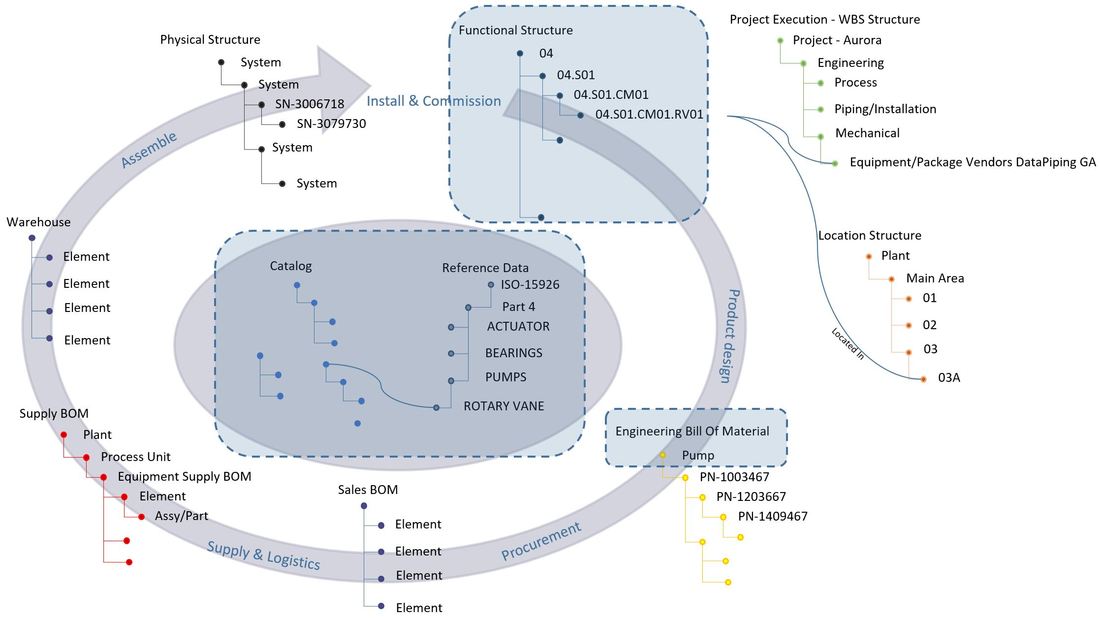

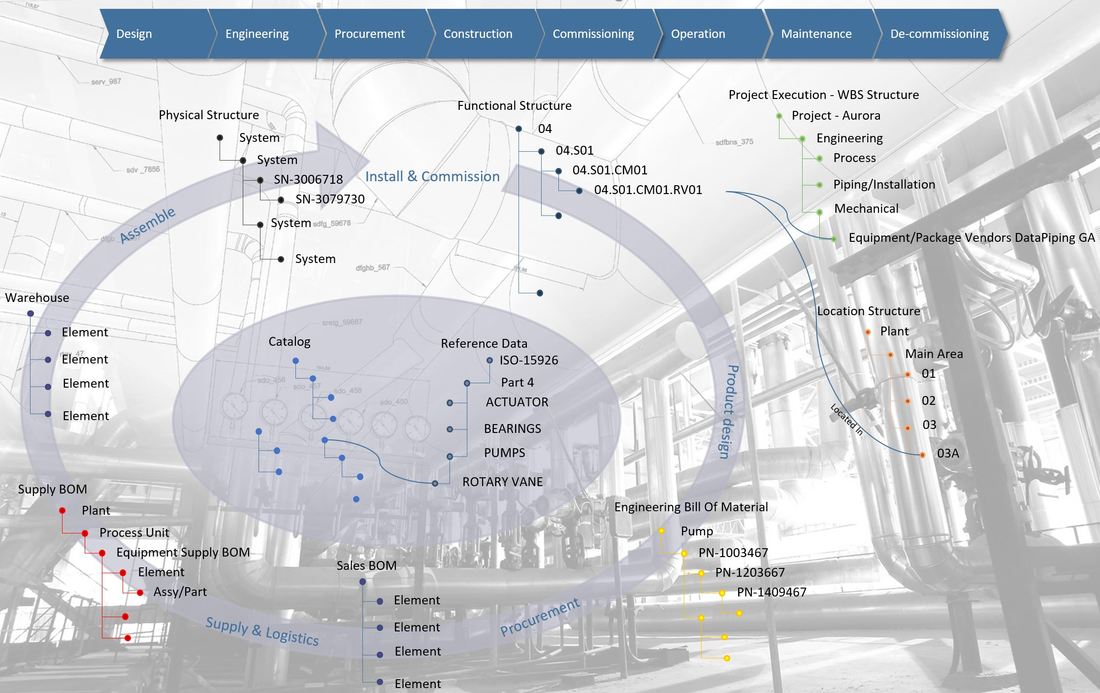

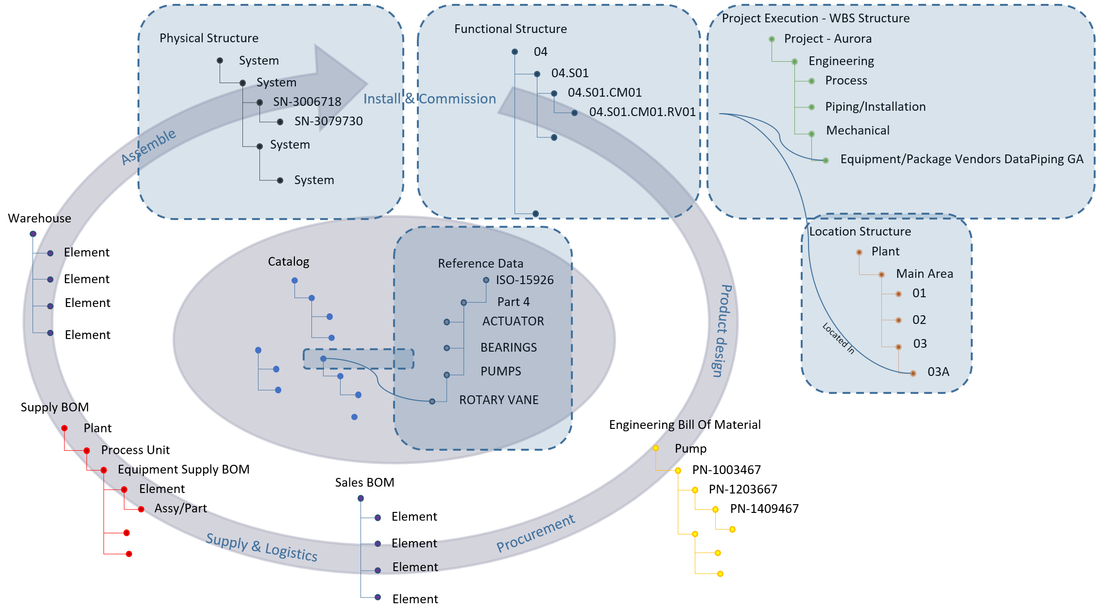

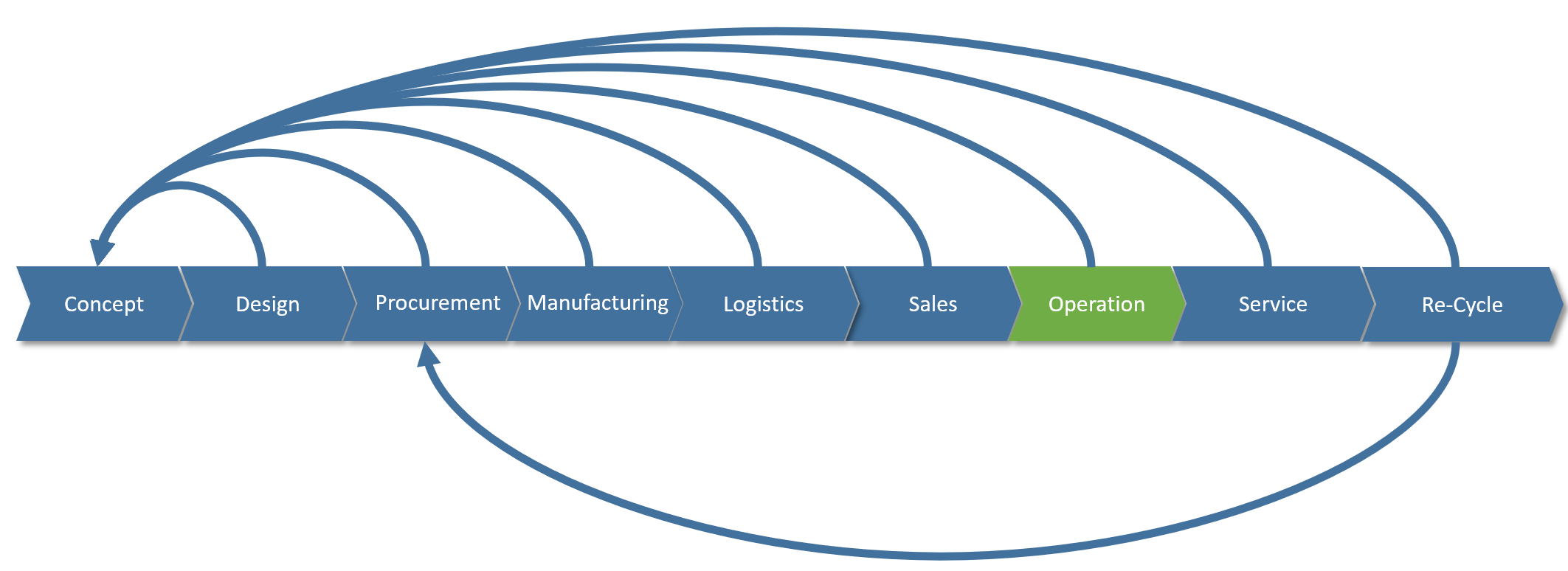

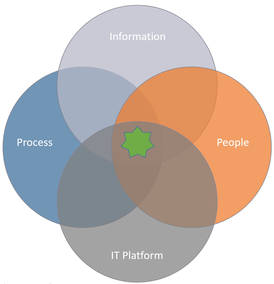

I will continue to use my information structure map, or the “circle of life” as a client jokingly called it, to explain where the different companies put their focus in terms of information management and why.

This company, EPC 2, faced the challenges of both EPCs and product companies in ETO (Engineer To Order) projects, as it owned several product companies and naturally used a lot of their products in its EPC projects.

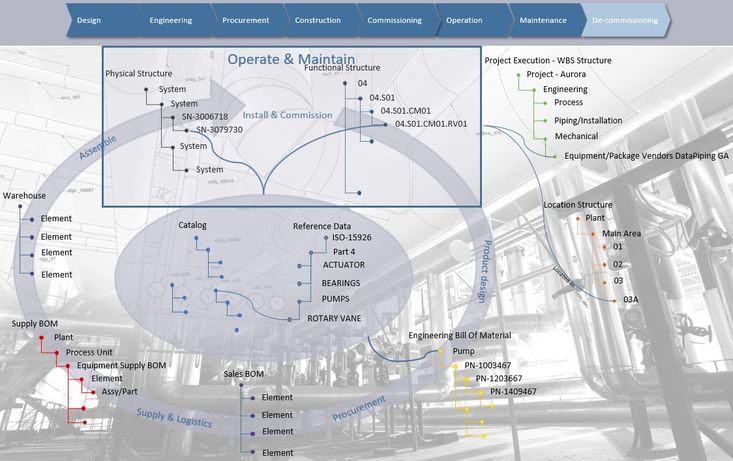

Their first objective was to respond to fierce competition from other parts of the world that had suddenly emerged on the global scene. To do so, it was considered crucial to limit the number of engineering hours spent on winning projects. To achieve this, they decided to build a catalog of re-usable data structures with different perspectives (plant, product, execution) in order to promote a controlled re-use of both plant and product engineering data. As with EPC 1, they recognized that standardization across disciplines would be necessary to make it all work. The reference/master data put in place for all disciplines to share was a proprietary company standard.

Secondly, they needed to replace a homegrown engineering data hub. This homegrown solution was very impressive indeed and contained a lot of functionality that commercial systems lack even today. However, its architecture was built around processes that no longer worked as EPC 2 entered new markets.

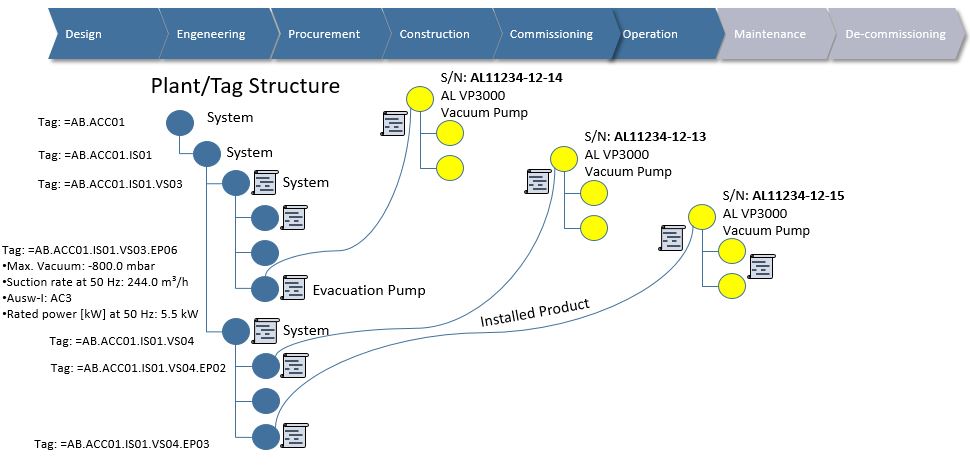

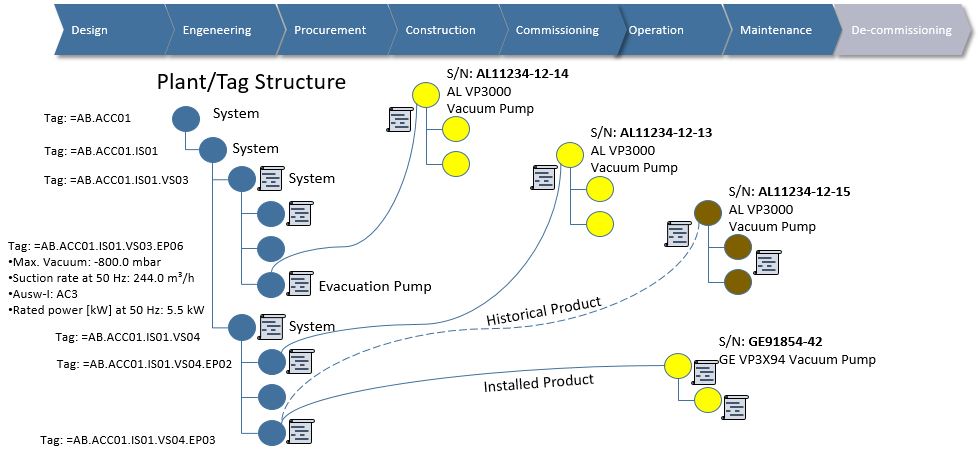

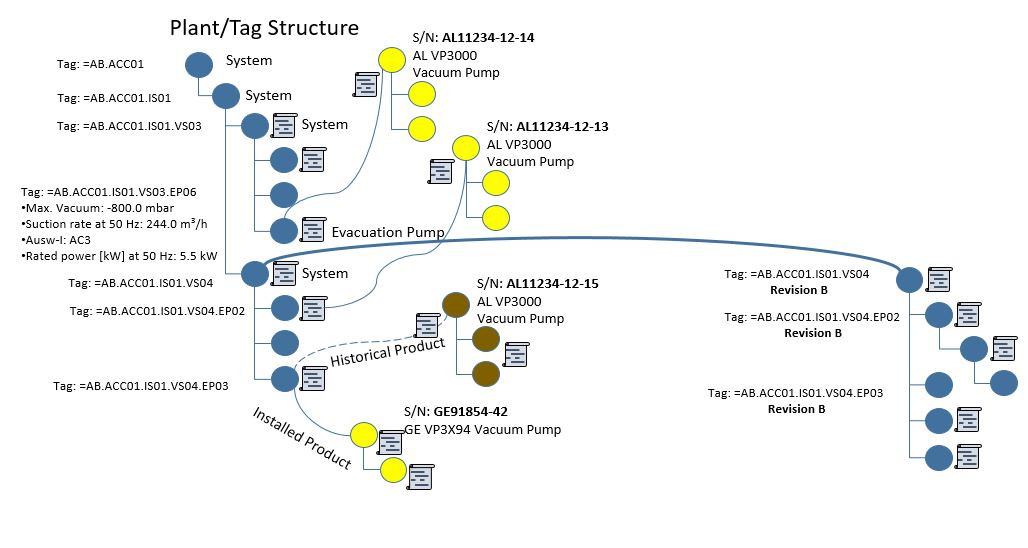

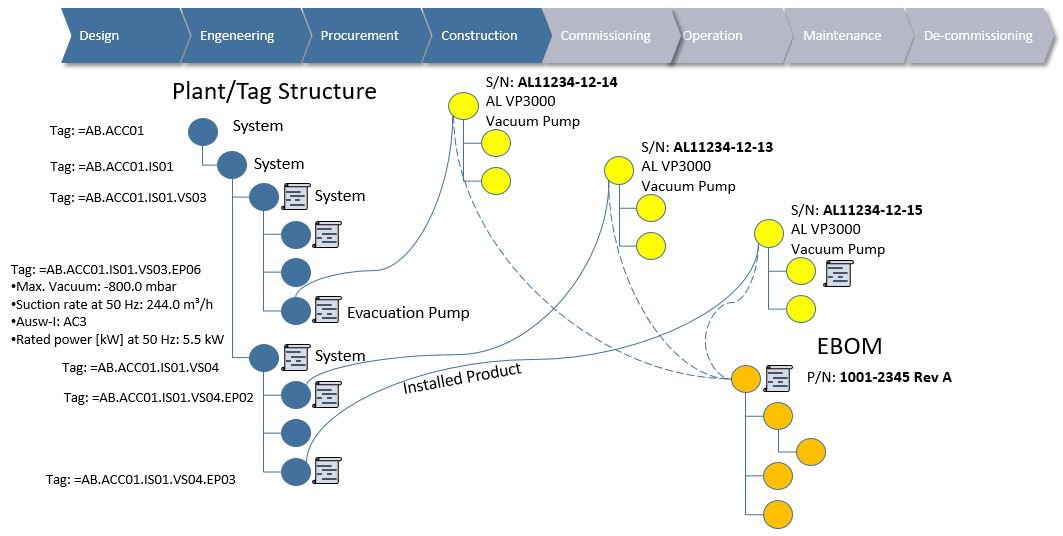

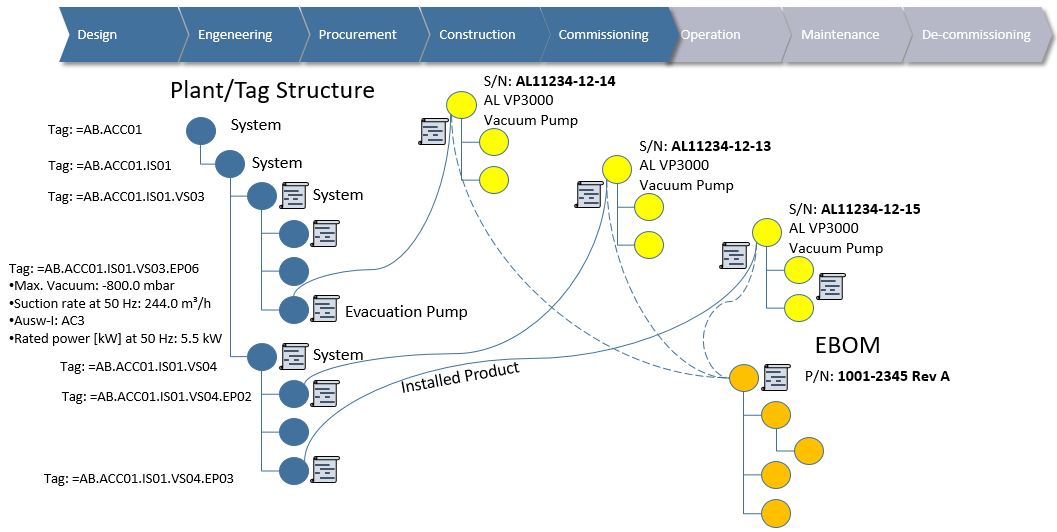

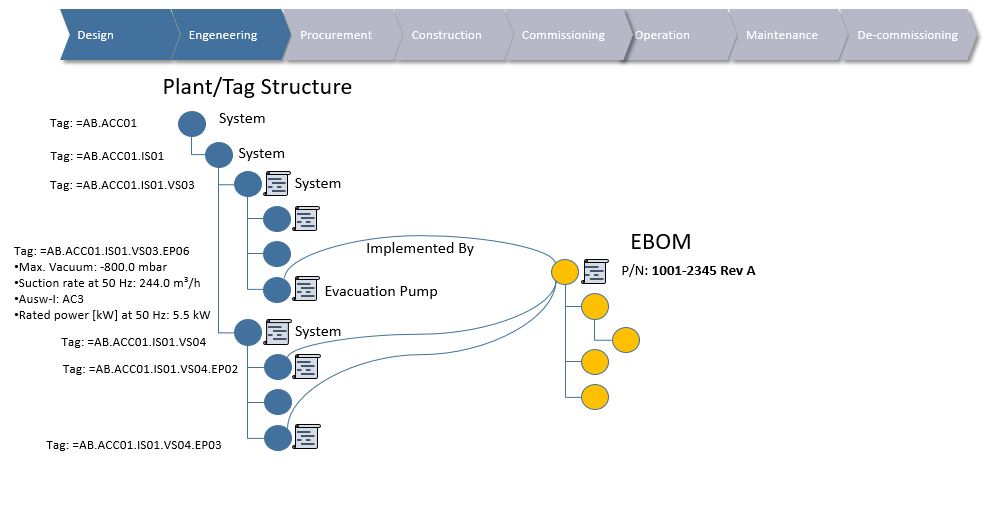

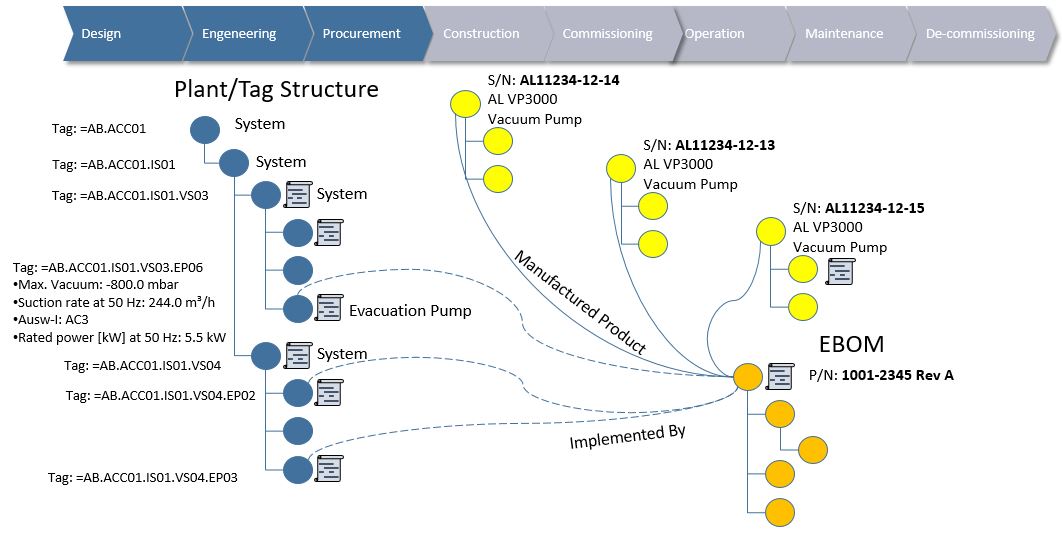

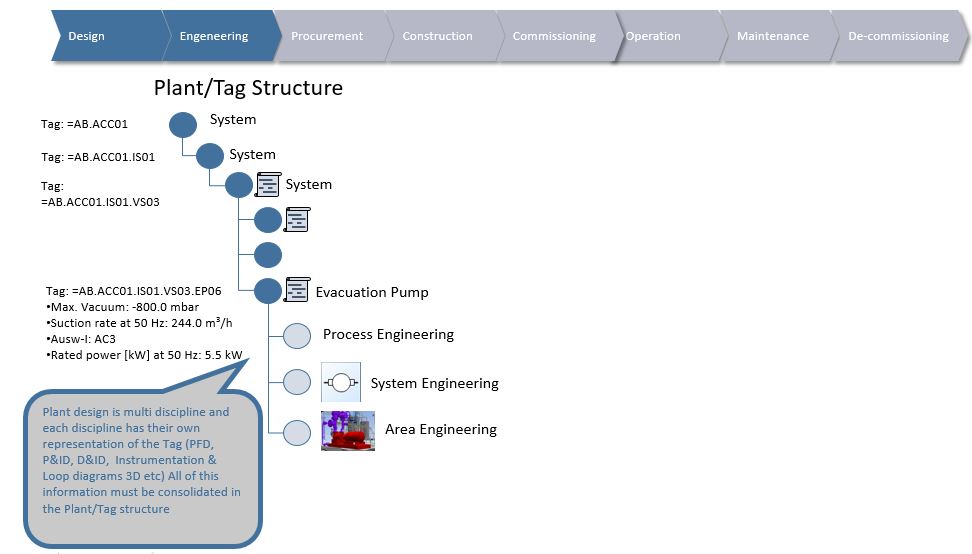

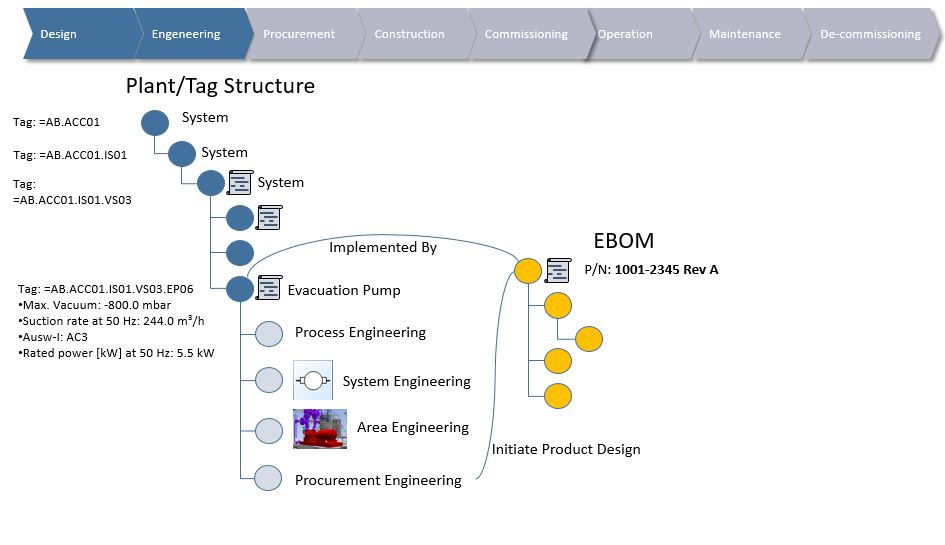

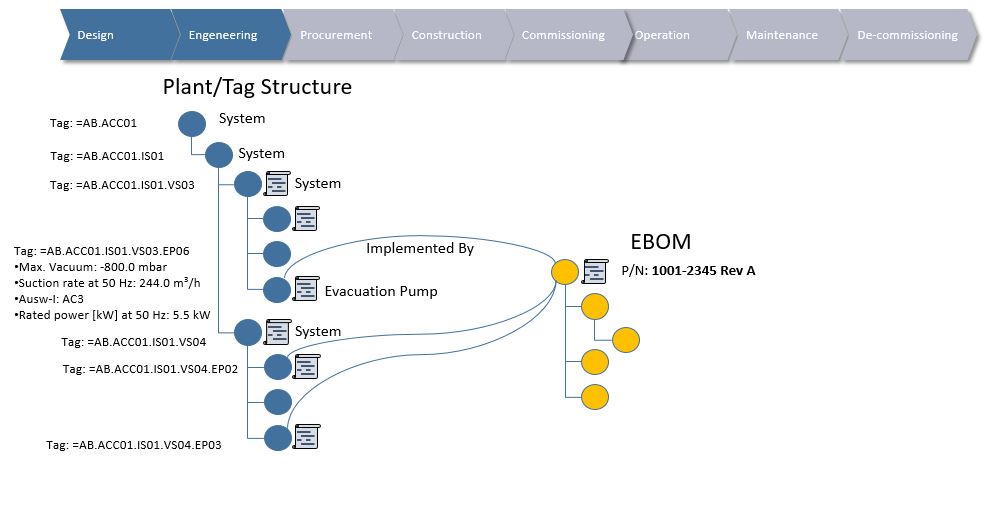

Thirdly, they wanted to connect their plant engineering disciplines with the various product engineering disciplines throughout their own product companies worldwide. Naturally, this meant run-time sharing and consolidation of data on a large scale. The emergence of the catalog with different aspects meant that plant engineering could pick systems and products from the catalog and have project-specific tag information auto-generated in the functional structure of their projects. It also meant that product engineering would be able to either generate a unique Engineer To Order bill of material if needed or, if plant engineering had not made any major modifications, link the tag to an already existing Engineering Bill of Material for the full product definition.

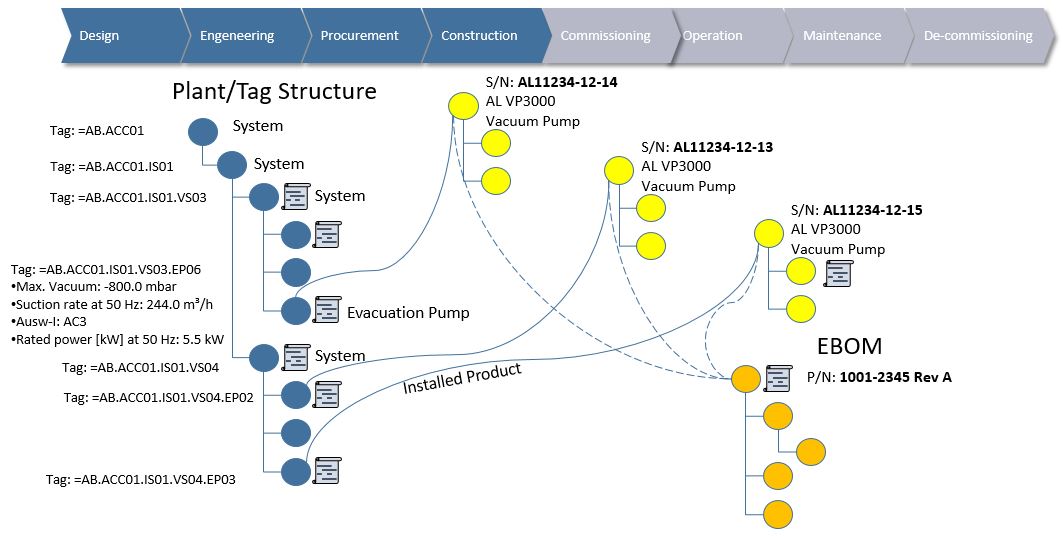

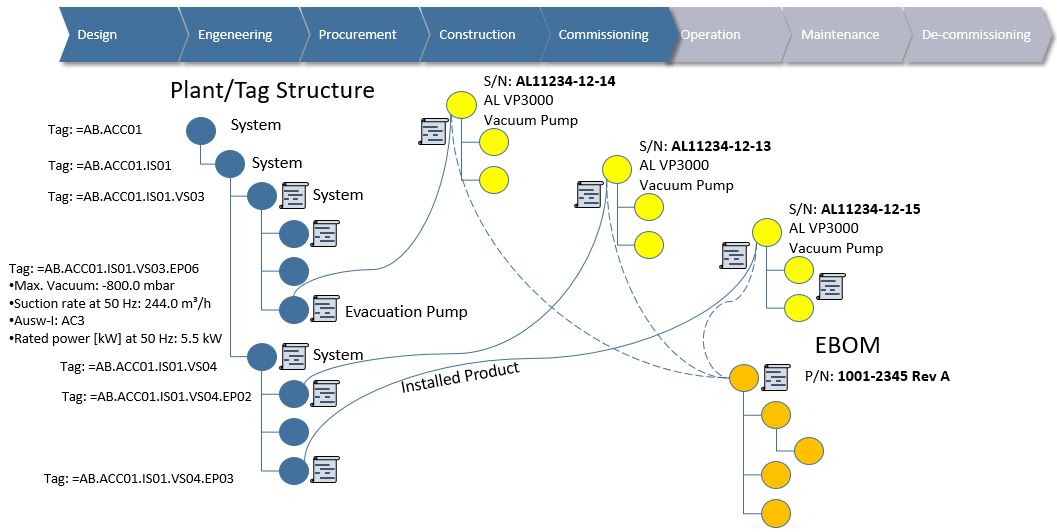
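The catalog-driven flow described above can be sketched in a few lines of Python. This is only an illustration under assumptions: the class names, the tag numbering format and the `major_modification` flag are hypothetical and are not taken from EPC 2's actual solution.

```python
from dataclasses import dataclass
from itertools import count


@dataclass(frozen=True)
class CatalogItem:
    """A re-usable catalog entry (hypothetical shape)."""
    code: str      # catalog identifier, e.g. "PUMP-A"
    ebom_id: str   # existing Engineering Bill of Material for the standard product


@dataclass
class Tag:
    """A project-specific tag in the functional structure."""
    tag_no: str
    catalog_code: str
    major_modification: bool = False  # set by plant engineering (assumed flag)


class FunctionalStructure:
    """Holds the tags of one project and numbers them sequentially."""

    def __init__(self, project: str):
        self.project = project
        self.tags = []
        self._seq = count(1)

    def pick(self, item: CatalogItem) -> Tag:
        # Picking from the catalog auto-generates a project-specific tag.
        tag_no = f"{self.project}-{item.code}-{next(self._seq):03d}"
        tag = Tag(tag_no, item.code)
        self.tags.append(tag)
        return tag


def resolve_bom(tag: Tag, catalog: dict) -> str:
    """Return the BOM id product engineering would work against.

    Unmodified tags link to the existing EBOM; tags with major
    modifications get a unique Engineer To Order BOM id.
    """
    if tag.major_modification:
        return f"ETO-{tag.tag_no}"
    return catalog[tag.catalog_code].ebom_id


catalog = {"PUMP-A": CatalogItem("PUMP-A", "EBOM-1001")}
project = FunctionalStructure("P42")
standard_pump = project.pick(catalog["PUMP-A"])
custom_pump = project.pick(catalog["PUMP-A"])
custom_pump.major_modification = True
```

Here `resolve_bom(standard_pump, catalog)` links to the shared EBOM, while `resolve_bom(custom_pump, catalog)` yields a project-unique ETO identifier. The more tags that resolve to an existing EBOM, the closer the project sits to Configure To Order.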

Their fourth objective was to obtain full traceability of changes across both plant and product engineering disciplines, from FEED (Front End Engineering & Design) to the delivered project. The reason for this objective was twofold: first, to be able to prove to clients (operators) where changes originated (largely from the clients themselves), and second, to be able to measure which changes originated from their own engineering disciplines without project planning and execution knowing about them. Does it sound familiar?

In order to achieve this, engineering data change management was enforced on both FEED functional design structures (yes, there could be several different design options for a project) and the functional structure in the actually executed EPC project. The agreed FEED functional structure was even locked and copied to serve as the starting point for the EPC project. At this point all data in the functional structure was released, subjected to full change management (meaning traceable Change Orders would be needed to change it) and made available to project planning and execution via integration.
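The lock, copy and change-order mechanism can be illustrated with a minimal Python sketch. All names here (`FunctionalStructure`, `ChangeOrder`, `start_epc_from_feed`, the `origin` values) are assumptions made for the example, not the data model EPC 2 actually used.

```python
import copy
from dataclasses import dataclass, field


class StructureLocked(Exception):
    """Raised when a locked (frozen) structure is modified."""


class ChangeOrderRequired(Exception):
    """Raised when released data is changed without a Change Order."""


@dataclass(frozen=True)
class ChangeOrder:
    number: str
    origin: str   # assumed values: "client" or "engineering"
    reason: str


@dataclass
class FunctionalStructure:
    name: str
    data: dict = field(default_factory=dict)
    locked: bool = False     # no further changes at all
    released: bool = False   # changes only via Change Order
    history: list = field(default_factory=list)

    def set_value(self, tag, value, change_order=None):
        if self.locked:
            raise StructureLocked(self.name)
        if self.released and change_order is None:
            raise ChangeOrderRequired(tag)
        old = self.data.get(tag)
        self.data[tag] = value
        if change_order is not None:
            # Keep a traceable record of who changed what, and why.
            self.history.append((change_order, tag, old, value))


def start_epc_from_feed(feed: FunctionalStructure) -> FunctionalStructure:
    """Lock the agreed FEED structure and copy it as the released EPC baseline."""
    feed.locked = True
    return FunctionalStructure(
        name=f"EPC from {feed.name}",
        data=copy.deepcopy(feed.data),
        released=True,
    )


def changes_by_origin(structure: FunctionalStructure) -> dict:
    """Count recorded changes per origin (client vs. own engineering)."""
    counts = {}
    for change_order, _tag, _old, _new in structure.history:
        counts[change_order.origin] = counts.get(change_order.origin, 0) + 1
    return counts


feed = FunctionalStructure("FEED option A")
feed.set_value("P-101", {"duty": "10 kW"})
epc = start_epc_from_feed(feed)
epc.set_value("P-101", {"duty": "12 kW"}, ChangeOrder("CO-1", "client", "higher flow"))
epc.set_value("P-101", {"duty": "15 kW"}, ChangeOrder("CO-2", "engineering", "design margin"))
```

Calling `changes_by_origin(epc)` then gives the kind of breakdown the fourth objective was after: how many changes came from the client and how many from the company's own engineering disciplines. The deep copy keeps the locked FEED baseline untouched for later comparison.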

Since product design and delivery made up a large portion of their projects, the Engineering Bill of Material (EBOM) and variant management (the catalog structures) received far more focus than at EPC 1 in my previous article. This was natural because, as mentioned, EPC 2 owned product companies and wanted to shift from Engineer To Order (ETO) towards more Configure To Order (CTO).

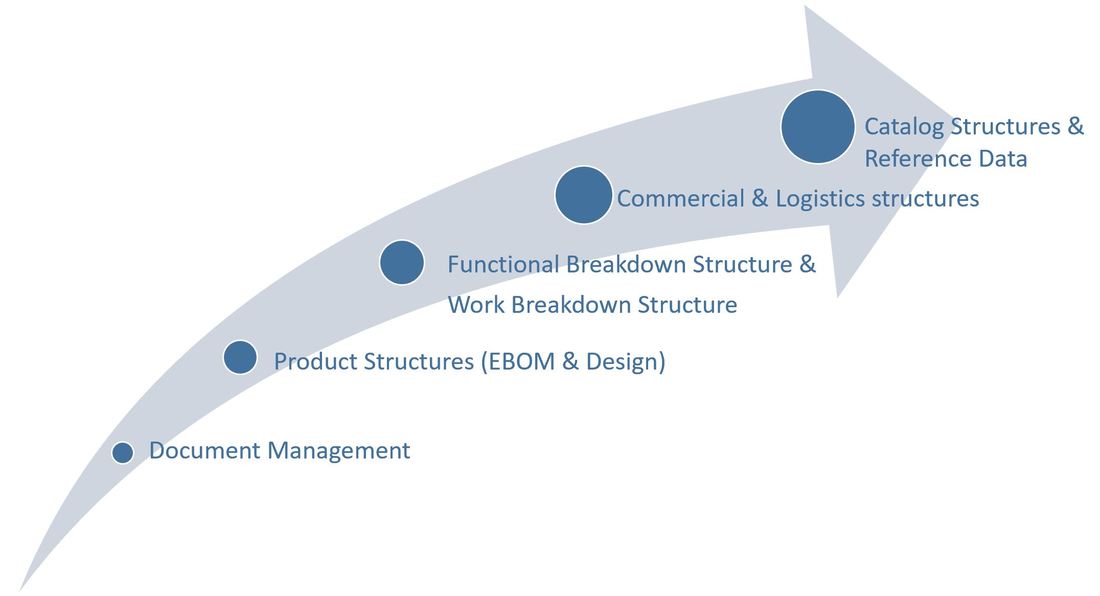

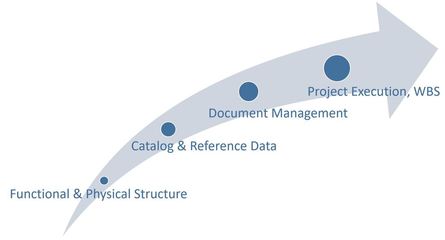

It was, however, decided to defer the catalog structures until the end, because they wanted to gain experience across the other aspects before starting to create the catalog itself.

The Functional Structure, with the consolidated plant design, project-specific data and associated documentation, came next, together with the establishment of structures for project execution (WBS), estimation and control (Sales structure), and logistics (Supply structure).

Once the various data structures were in place, the focus was turned to “gluing it all together” with the re-usable catalog structures and the reference data which enabled interoperability across disciplines.

A more comprehensive overview explaining the different structures can be found in the article Plant Information Management - Information Structures.

Further details regarding each information structure are discussed in:

Plant Engineering meets Product Engineering in capital projects

Handover to logistics and supply chain in capital projects

Plant Information Management - Installation and Commissioning

Plant Information Management – Operations and Maintenance

Bjorn Fidjeland

The header image used in this post is by 8vfand and purchased at dreamstime.com
